Prepare to deploy an Amazon Web Services (AWS) web application workload to Azure

This article provides a comprehensive guide on how to deploy a robust and production-ready infrastructure to facilitate the hosting, protection, scaling, and monitoring of a web application on the Azure platform.

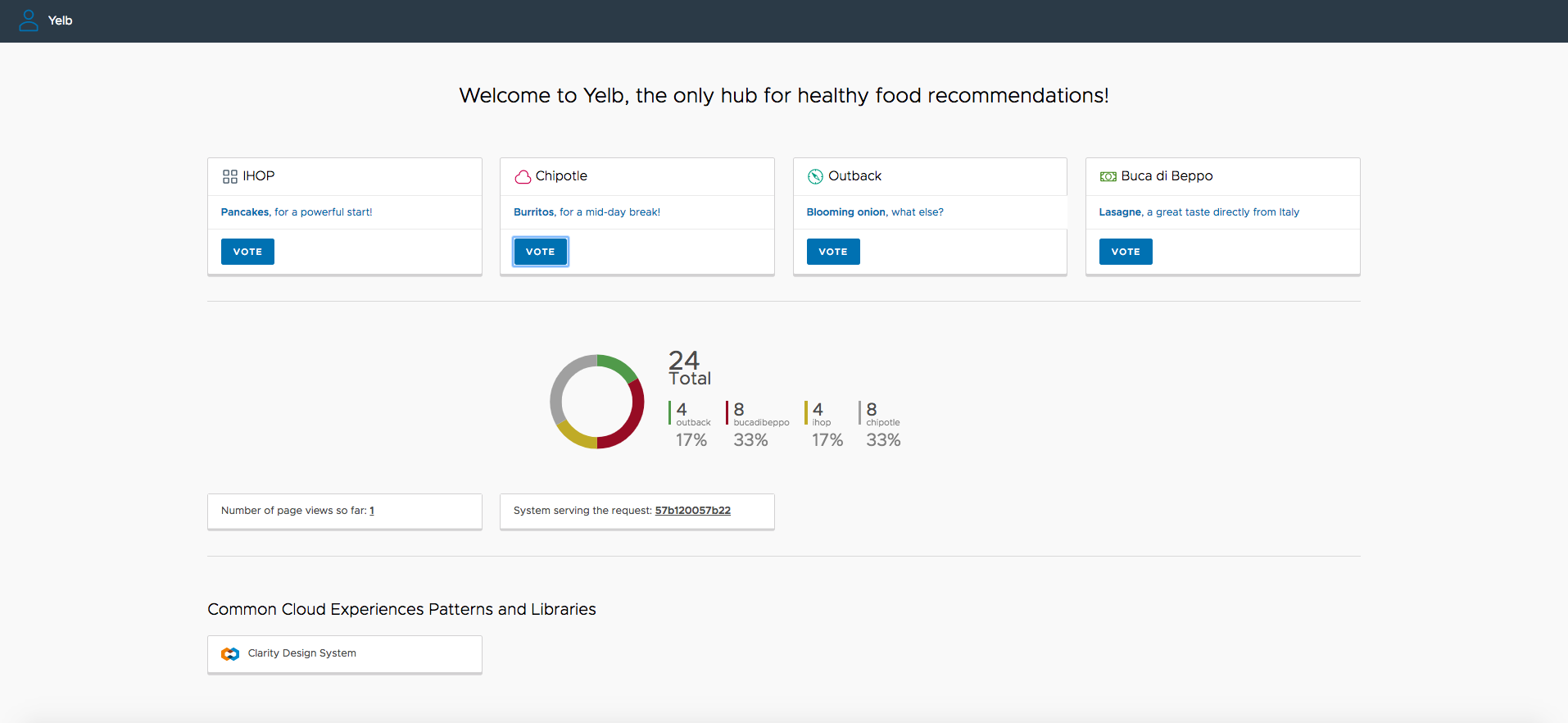

Yelb deployment on AWS

The Yelb sample web application on AWS is deployed using Bash, the AWS CLI, eksctl, kubectl, and Helm. The companion sample contains Bash scripts and YAML manifests that you can use to create an Amazon Elastic Kubernetes Service (Amazon EKS) cluster and deploy the Yelb application to it. The solution demonstrates how to use AWS WAF to protect a web application running on Amazon EKS: the Yelb web application is exposed to the public internet through an Application Load Balancer (ALB) and protected by an AWS WAF web access control list (web ACL). For detailed instructions, see Porting a Web Application from AWS Elastic Kubernetes Service (EKS) to Azure Kubernetes Service (AKS).

Yelb deployment on Azure

In the following sections, you learn how to deploy the Yelb sample web application on an Azure Kubernetes Service (AKS) cluster and expose it through an ingress controller like the NGINX ingress controller. The ingress controller service is accessible via an internal (or private) load balancer, which balances traffic within the virtual network housing the AKS cluster. In a hybrid scenario, the load balancer frontend can be accessed from an on-premises network. To learn more about internal load balancing, see Use an internal load balancer with Azure Kubernetes Service (AKS).
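For the unmanaged (Helm) option, the internal load balancer is usually requested with an annotation on the controller's Kubernetes Service. The following minimal Python sketch, an illustration rather than the companion sample's code, builds values for the community ingress-nginx Helm chart that set the `service.beta.kubernetes.io/azure-load-balancer-internal` annotation and prints them as JSON. The replica count is a hypothetical default.

```python
import json

# Annotation read by the Azure cloud provider: when set to "true" on a Service of
# type LoadBalancer, AKS gives the Service a private frontend IP in the cluster's
# virtual network instead of a public IP.
INTERNAL_LB_ANNOTATION = "service.beta.kubernetes.io/azure-load-balancer-internal"


def internal_ingress_values(replicas: int = 2) -> dict:
    """Return ingress-nginx Helm values that keep the controller private.

    The default replica count is a hypothetical value chosen for illustration.
    """
    return {
        "controller": {
            "replicaCount": replicas,
            "service": {
                "type": "LoadBalancer",
                "annotations": {INTERNAL_LB_ANNOTATION: "true"},
            },
        }
    }


if __name__ == "__main__":
    # JSON is valid YAML, so the output can be used as a Helm values file.
    print(json.dumps(internal_ingress_values(), indent=2))
```

Assuming you save the script as internal_values.py (a hypothetical name), you could redirect its output to internal-ingress-values.json and pass that file with `-f` to `helm upgrade --install ingress-nginx ingress-nginx/ingress-nginx`, after adding the chart repository from https://kubernetes.github.io/ingress-nginx.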

The companion sample supports installing a managed NGINX ingress controller with the application routing add-on or an unmanaged NGINX ingress controller using the Helm chart. The application routing add-on with NGINX ingress controller provides the following features:

- Easy configuration of managed NGINX ingress controllers based on Kubernetes NGINX ingress controller.

- Integration with Azure DNS for public and private zone management.

- SSL termination with certificates stored in Azure Key Vault.

For other configurations, see the following articles:

- DNS and SSL configuration

- Application routing add-on configuration

- Configure internal NGINX ingress controller for Azure private DNS zone.
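If you use the application routing add-on instead, its documentation describes creating an additional managed controller through the `NginxIngressController` custom resource, whose `loadBalancerAnnotations` are applied to the controller's load balancer Service. The Python sketch below builds such a resource with an internal load balancer. The name `nginx-internal` is hypothetical, and you should check the add-on documentation for the API version your cluster supports.

```python
import json

INTERNAL_LB_ANNOTATION = "service.beta.kubernetes.io/azure-load-balancer-internal"


def internal_nginx_controller(name: str = "nginx-internal") -> dict:
    """Build an NginxIngressController resource for the application routing add-on.

    The resource name, ingress class name, and controller prefix reuse one
    hypothetical value; Ingress objects select this controller via ingressClassName.
    """
    return {
        "apiVersion": "approuting.kubernetes.azure.com/v1alpha1",
        "kind": "NginxIngressController",
        "metadata": {"name": name},
        "spec": {
            "ingressClassName": name,
            "controllerNamePrefix": name,
            # Copied onto the controller's LoadBalancer Service: private frontend IP.
            "loadBalancerAnnotations": {INTERNAL_LB_ANNOTATION: "true"},
        },
    }


if __name__ == "__main__":
    # kubectl accepts JSON manifests, for example: python script.py | kubectl apply -f -
    print(json.dumps(internal_nginx_controller(), indent=2))
```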

To enhance security, the Yelb application is protected by an Azure Application Gateway resource, deployed in a dedicated subnet within the same virtual network as the AKS cluster or in a peered virtual network. The Azure Web Application Firewall (WAF) policy associated with the Application Gateway protects the web application, which is hosted on Azure Kubernetes Service (AKS) and exposed through the gateway, against common exploits and vulnerabilities.

Prerequisites

- An active Azure subscription. If you don't have one, create a free Azure account before you begin.

- The Owner Azure built-in role, or the User Access Administrator and Contributor built-in roles, on a subscription in your Azure account.

- Azure CLI version 2.61.0 or later. For more information, see Install Azure CLI.

- The aks-preview Azure CLI extension.

- jq version 1.5 or later.

- Python 3 or later.

- kubectl version 1.21.0 or later.

- Helm version 3.0.0 or later.

- Visual Studio Code installed on one of the supported platforms along with the Bicep extension.

- An existing Azure Key Vault resource with a valid TLS certificate for the Yelb web application.

- An existing Azure DNS Zone or equivalent DNS server for the name resolution of the Yelb application.
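Because several tools have minimum versions, a quick check before deployment can prevent a failed run. The following Python sketch compares a version string, as printed by each tool, against the minimums listed above. The parsing rule (take the first dotted number in the output) is a simplifying assumption and might need adjusting for a given tool's output format.

```python
import re
from typing import Tuple

# Minimum versions taken from the prerequisites list.
MINIMUM_VERSIONS = {
    "azure-cli": "2.61.0",
    "jq": "1.5",
    "kubectl": "1.21.0",
    "helm": "3.0.0",
}


def parse_version(text: str) -> Tuple[int, ...]:
    """Extract the first dotted numeric version, e.g. 'jq-1.6' -> (1, 6)."""
    match = re.search(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(installed: str, required: str) -> bool:
    """Compare versions numerically, padding the shorter one with zeros."""
    have, need = parse_version(installed), parse_version(required)
    width = max(len(have), len(need))
    have += (0,) * (width - len(have))
    need += (0,) * (width - len(need))
    return have >= need
```

For example, `meets_minimum("jq-1.6", MINIMUM_VERSIONS["jq"])` is True, while `meets_minimum("v1.20.5", MINIMUM_VERSIONS["kubectl"])` is False. Comparing integer tuples avoids the string-comparison trap where "1.9" would sort after "1.21".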

Architecture

This sample provides a collection of Bicep templates, Bash scripts, and YAML manifests for building an AKS cluster, deploying the Yelb application, exposing the UI service using the NGINX ingress controller, and protecting it with the Azure Application Gateway and Azure Web Application Firewall (WAF).

This sample also includes two separate Bicep parameter files and two sets of Bash scripts and YAML manifests, one for each of the two solution options described in the following sections. For more information on Bicep, see What is Bicep?

TLS termination at the Application Gateway and Yelb invocation via HTTP

In this solution, the Azure Web Application Firewall (WAF) ensures the security of the system by blocking malicious attacks. The Azure Application Gateway receives incoming calls from client applications, performs TLS termination, and forwards the requests to the AKS-hosted yelb-ui service. This communication is achieved through the internal load balancer and NGINX ingress controller using the HTTP transport protocol. The following diagram illustrates the architecture:

The message flow is as follows:

- The Azure Application Gateway handles TLS termination and sends incoming calls to the AKS-hosted yelb-ui service over HTTP.

- The Application Gateway Listener uses an SSL certificate obtained from Azure Key Vault to ensure secure communication.

- The Azure WAF Policy associated with the Listener applies OWASP rules and custom rules to incoming requests, effectively preventing many types of malicious attacks.

- The Application Gateway Backend HTTP Settings invoke the Yelb application via HTTP using port 80.

- The Application Gateway Backend Pool and Health Probe call the NGINX ingress controller through the AKS internal load balancer using the HTTP protocol for traffic management.

- The NGINX ingress controller is exposed through the AKS internal load balancer, so it's reachable only from within the virtual network or from connected networks, not from the public internet.

- A Kubernetes ingress object uses the NGINX ingress controller to expose the application via HTTP through the internal load balancer.

- The yelb-ui service uses the ClusterIP type, which restricts its invocation to within the cluster or through the NGINX ingress controller.
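The cluster-side objects in this flow can be sketched as two manifests: a ClusterIP Service for yelb-ui and an Ingress that routes plain-HTTP requests for the workload hostname to it. The following Python sketch prints them as a Kubernetes List that `kubectl apply -f -` accepts. The hostname, namespace, `app: yelb-ui` selector label, and port 80 are assumptions for illustration; use the values from the companion sample's manifests. The ingress class `webapprouting.kubernetes.azure.com` is the one created by the application routing add-on; for a Helm-installed controller, use its class name (commonly `nginx`).

```python
import json

# Hypothetical values: replace with your own hostname and namespace.
HOSTNAME = "yelb.contoso.com"
NAMESPACE = "yelb"
INGRESS_CLASS = "webapprouting.kubernetes.azure.com"


def yelb_ui_service() -> dict:
    """ClusterIP Service: reachable only inside the cluster, e.g. from NGINX pods."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": "yelb-ui", "namespace": NAMESPACE},
        "spec": {
            "type": "ClusterIP",
            "selector": {"app": "yelb-ui"},  # assumed pod label
            "ports": [{"port": 80, "targetPort": 80, "protocol": "TCP"}],
        },
    }


def yelb_ui_http_ingress() -> dict:
    """Ingress that routes HTTP requests for HOSTNAME to the yelb-ui Service."""
    path = {
        "path": "/",
        "pathType": "Prefix",
        "backend": {"service": {"name": "yelb-ui", "port": {"number": 80}}},
    }
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "yelb-ui", "namespace": NAMESPACE},
        "spec": {
            "ingressClassName": INGRESS_CLASS,
            "rules": [{"host": HOSTNAME, "http": {"paths": [path]}}],
        },
    }


if __name__ == "__main__":
    # A v1 List lets kubectl apply both objects in one call.
    items = [yelb_ui_service(), yelb_ui_http_ingress()]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```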

Implementing end-to-end TLS using Azure Application Gateway

TLS termination

Azure Application Gateway supports TLS termination at the gateway level, which means that traffic is decrypted at the gateway before being sent to the backend servers. To configure TLS termination, you need to add a TLS/SSL certificate to the listener. The certificate should be in Personal Information Exchange (PFX) format, which contains both the private and public keys. You can import the certificate from Azure Key Vault to the Application Gateway. For more information, see TLS termination with Key Vault certificates.

Zero Trust security model

If you adopt a Zero Trust security model, you should prevent unencrypted communication between a service proxy like Azure Application Gateway and the backend servers. With the Zero Trust security model, trust isn't automatically granted to any user or device trying to access resources within a network. Instead, it requires continuous verification of identity and authorization for each request, regardless of the user's location or network. In our scenario, implementing the Zero Trust security model involves using the Azure Application Gateway as a service proxy, which acts as a front-end for incoming requests. These requests then travel down to the NGINX ingress controller on Azure Kubernetes Service (AKS) in an encrypted format.

End-to-end TLS with Application Gateway

You can implement a Zero Trust approach by configuring Azure Application Gateway for end-to-end TLS encryption with the backend servers. End-to-end TLS encryption allows you to securely transmit sensitive data to the backend while leveraging Application Gateway's layer 7 load balancing features, including cookie-based session affinity, URL-based routing, routing based on sites, and the ability to rewrite or inject X-Forwarded-* headers.

When Application Gateway is configured for end-to-end TLS, it terminates the client TLS session at the gateway and decrypts user traffic. It then applies the configured rules to select the appropriate backend pool instance. Next, Application Gateway opens a new TLS connection to the backend server, validates the backend server's certificate, and re-encrypts the request before transmitting it to the backend. The response from the web server follows the same path back to the end user. To enable end-to-end TLS, set the protocol in the Backend HTTP Settings to HTTPS and apply those settings to a backend pool. This way, traffic between the gateway and the backend servers is also encrypted, which helps you meet Zero Trust and compliance requirements.
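To make the re-encryption step concrete, the following Python sketch shows what any TLS client, including a proxy acting as one, does when it opens the second leg to a backend: it verifies the backend certificate chain and checks that the certificate matches the expected hostname, which is also sent as SNI. This uses Python's standard ssl module as an analogy only; it isn't how Application Gateway is implemented, and the hostname and optional CA bundle path are hypothetical.

```python
import socket
import ssl
from typing import Optional


def backend_tls_context(cafile: Optional[str] = None) -> ssl.SSLContext:
    """Client-side TLS context that validates the backend's certificate.

    cafile can point to a private root CA bundle (hypothetical path);
    when it's None, the system trust store is used.
    """
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH, cafile=cafile)
    # create_default_context already enables these; set explicitly for clarity.
    context.verify_mode = ssl.CERT_REQUIRED
    context.check_hostname = True
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def open_backend_connection(host: str, port: int = 443) -> ssl.SSLSocket:
    """Open a new, independently encrypted connection to the backend.

    server_hostname is sent as SNI and compared with the names in the
    certificate, so a certificate for a different hostname is rejected.
    """
    raw = socket.create_connection((host, port), timeout=10)
    try:
        return backend_tls_context().wrap_socket(raw, server_hostname=host)
    except Exception:
        raw.close()  # don't leak the TCP socket if the handshake fails
        raise
```

For example, calling `open_backend_connection` for a backend that presents a certificate that doesn't cover the requested name raises `ssl.SSLCertVerificationError`, which is one reason the backend certificate should match the hostname that clients use.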

For more information, see Application Gateway end-to-end TLS encryption and Best practices for securing your Application Gateway.

In this solution, the Azure Web Application Firewall (WAF) ensures the security of the system by blocking malicious attacks. The Azure Application Gateway handles incoming calls from client applications, performs TLS termination, and implements end-to-end TLS by invoking the underlying AKS-hosted yelb-ui service using the HTTPS transport protocol via the internal load balancer and NGINX ingress controller. The following diagram illustrates the architecture:

The message flow is as follows:

- The Azure Application Gateway handles TLS termination and communicates with the backend application over HTTPS.

- The Application Gateway Listener uses an SSL certificate obtained from Azure Key Vault.

- The Azure WAF Policy associated with the Listener runs OWASP rules and custom rules against incoming requests to block malicious attacks.

- The Application Gateway Backend HTTP Settings are configured to invoke the AKS-hosted yelb-ui service via HTTPS on port 443.

- The Application Gateway Backend Pool and Health Probe call the NGINX ingress controller through the AKS internal load balancer using HTTPS.

- The NGINX ingress controller is deployed to use the AKS internal load balancer.

- The AKS cluster is configured with the Azure Key Vault provider for Secrets Store CSI Driver add-on to retrieve secrets, certificates, and keys from Azure Key Vault via a CSI volume.

- A SecretProviderClass is used to retrieve the certificate used by the Application Gateway from Key Vault.

- A Kubernetes ingress object uses the NGINX ingress controller to expose the application via HTTPS through the AKS internal load balancer.

- The yelb-ui service has the ClusterIP type, which restricts its invocation to within the cluster or through the NGINX ingress controller.
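The SecretProviderClass in this flow can be sketched as follows. This Python sketch follows the pattern in the Azure Key Vault provider documentation for ingress TLS: it requests the certificate with `objectType: secret`, which returns the private key together with the certificate, and syncs it into a `kubernetes.io/tls` Secret. All names, the identity client ID, and the use of a user-assigned managed identity (`useVMManagedIdentity`) are hypothetical placeholders; the companion sample might authenticate differently, for example with Microsoft Entra Workload ID.

```python
import json

# Hypothetical placeholders: substitute your Key Vault, certificate, tenant,
# and the client ID of the identity used by the Key Vault provider add-on.
KEY_VAULT_NAME = "<key-vault-name>"
CERT_NAME = "<certificate-name>"
TENANT_ID = "<tenant-id>"
IDENTITY_CLIENT_ID = "<identity-client-id>"


def yelb_tls_secret_provider_class(namespace: str = "yelb") -> dict:
    """SecretProviderClass that mounts the Key Vault certificate and syncs it to a TLS Secret."""
    # The provider expects "objects" as a YAML string containing an array.
    objects = (
        "array:\n"
        "  - |\n"
        f"    objectName: {CERT_NAME}\n"
        "    objectType: secret\n"
    )
    return {
        "apiVersion": "secrets-store.csi.x-k8s.io/v1",
        "kind": "SecretProviderClass",
        "metadata": {"name": "yelb-tls", "namespace": namespace},
        "spec": {
            "provider": "azure",
            # Desired state of the Kubernetes Secret that an Ingress can reference.
            "secretObjects": [
                {
                    "secretName": "yelb-tls-secret",
                    "type": "kubernetes.io/tls",
                    "data": [
                        {"objectName": CERT_NAME, "key": "tls.key"},
                        {"objectName": CERT_NAME, "key": "tls.crt"},
                    ],
                }
            ],
            "parameters": {
                "usePodIdentity": "false",
                "useVMManagedIdentity": "true",
                "userAssignedIdentityID": IDENTITY_CLIENT_ID,
                "keyvaultName": KEY_VAULT_NAME,
                "tenantId": TENANT_ID,
                "objects": objects,
            },
        },
    }


if __name__ == "__main__":
    print(json.dumps(yelb_tls_secret_provider_class(), indent=2))
```

The synced Secret is created only while at least one pod mounts a CSI volume that references this SecretProviderClass, and the SecretProviderClass must be in the same namespace as that pod.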

To help ensure the security and stability of the system, consider the following recommendations:

- Regularly update the Azure WAF Policy with the latest rules to ensure optimal security.

- Implement monitoring and logging mechanisms to track and analyze incoming requests and potential attacks.

- Regularly perform maintenance and updates of the AKS cluster, NGINX ingress controller, and Application Gateway to address any security vulnerabilities and maintain a secure infrastructure.


Hostname

The Application Gateway Listener and the Kubernetes ingress are configured to use the same hostname. It's important to use the same hostname for a service proxy and a backend web application for the following reasons:

- Preservation of session state: When you use a different hostname for the proxy and the backend application, session state can get lost. This means that user sessions might not persist properly, resulting in a poor user experience and potential loss of data.

- Authentication failure: If the hostname differs between the proxy and the backend application, authentication mechanisms might fail, for example because redirect URIs registered with the identity provider no longer match. This mismatch can prevent users from logging in or accessing secure resources within the application.

- Inadvertent exposure of URLs: If the hostname isn't preserved, there's a risk that backend URLs might be exposed to end users. This can lead to potential security vulnerabilities and unauthorized access to sensitive information.

- Cookie issues: Cookies play a crucial role in maintaining user sessions and passing information between the client and the server. When the hostname differs, cookies might not work as expected, leading to issues such as failed authentication, improper session handling, and incorrect redirection.

- End-to-end TLS/SSL requirements: If end-to-end TLS/SSL is required for secure communication between the proxy and the backend service, a matching TLS certificate for the original hostname is necessary. Using the same hostname simplifies the certificate management process and ensures that secure communication is established seamlessly.

You can avoid these problems by using the same hostname for the service proxy and the backend web application. The backend application sees the same domain as the web browser, ensuring that session state, authentication, and URL handling function correctly.
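The cookie problem follows from how browsers scope cookies. Per RFC 6265, a browser ignores a Set-Cookie header whose Domain attribute doesn't domain-match the host it requested, and it sends stored cookies only to matching hosts. The following Python sketch implements a simplified version of that domain-match rule (it omits public suffix checks and other browser-specific policies) to show why a backend that sets cookies for its own internal hostname breaks sessions behind a proxy that uses a different hostname.

```python
import ipaddress


def _is_ip_address(host: str) -> bool:
    """True if host is a literal IPv4 or IPv6 address rather than a DNS name."""
    try:
        ipaddress.ip_address(host)
    except ValueError:
        return False
    return True


def domain_matches(request_host: str, cookie_domain: str) -> bool:
    """Simplified domain matching from RFC 6265, section 5.1.3."""
    host = request_host.lower()
    domain = cookie_domain.lower()
    # RFC 6265 section 5.2.3: a single leading dot in the Domain attribute is ignored.
    if domain.startswith("."):
        domain = domain[1:]
    if host == domain:
        return True
    # Subdomain match: host ends with "." + domain and isn't an IP address.
    return host.endswith("." + domain) and not _is_ip_address(host)
```

For example, if the backend sets a cookie with Domain=yelb-ui.internal.contoso.com (a hypothetical internal hostname) while the browser requested yelb.contoso.com, `domain_matches("yelb.contoso.com", "yelb-ui.internal.contoso.com")` returns False, so the browser discards the cookie. When the proxy and backend share one hostname, the check succeeds.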

Message Flow

The following diagram shows the steps for the message flow during deployment and runtime.

Deployment workflow

The following steps describe the deployment process. This workflow corresponds to the green numbers in the preceding diagram.

- A security engineer generates a certificate for the custom domain that the workload uses, and saves it in an Azure key vault. You can obtain a valid certificate from a well-known certification authority (CA).

- A platform engineer specifies the necessary information in the main.bicepparam Bicep parameters file and deploys the Bicep templates to create the Azure resources. The necessary information includes:

- A prefix for the Azure resources.

- The name and resource group of the existing Azure Key Vault that holds the TLS certificate for the workload hostname and the Application Gateway listener custom domain.

- You can configure the deployment script to install the following packages on your AKS cluster. For more information, see the parameters section of the Bicep module.

- Prometheus and Grafana using the Prometheus community Kubernetes Helm charts: By default, this sample configuration doesn't install Prometheus and Grafana to the AKS cluster. Instead, it installs Azure Managed Prometheus and Azure Managed Grafana.

- cert-manager: Certificate Manager isn't necessary in this sample, as both the Application Gateway and NGINX ingress controller use a pre-uploaded TLS certificate from Azure Key Vault.

- NGINX ingress controller via a Helm chart: If you use the managed NGINX ingress controller with the application routing add-on, you don't need to install another instance of the NGINX ingress controller via Helm.

- The Application Gateway Listener retrieves the TLS certificate from Azure Key Vault.

- The Kubernetes ingress object uses the certificate obtained from the Azure Key Vault provider for Secrets Store CSI Driver to expose the Yelb UI service via HTTPS.

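For the end-to-end TLS option, the Ingress adds a tls section that references the Secret the Secrets Store CSI Driver syncs from Key Vault. The following Python sketch builds such an Ingress. The hostname, namespace, ingress class, and Secret name `yelb-tls-secret` are hypothetical; the Secret name must match the secretName declared in your SecretProviderClass, and the hostname must be the same one used by the Application Gateway listener. Forwarding to yelb-ui on port 80 assumes that NGINX terminates the gateway's TLS connection and calls the ClusterIP Service over HTTP inside the cluster.

```python
import json

# Hypothetical values; see the lead-in for the constraints on each one.
HOSTNAME = "yelb.contoso.com"
NAMESPACE = "yelb"
INGRESS_CLASS = "webapprouting.kubernetes.azure.com"
TLS_SECRET = "yelb-tls-secret"


def yelb_ui_https_ingress() -> dict:
    """Ingress that serves HOSTNAME over HTTPS with the certificate from Key Vault."""
    path = {
        "path": "/",
        "pathType": "Prefix",
        "backend": {"service": {"name": "yelb-ui", "port": {"number": 80}}},
    }
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": "yelb-ui", "namespace": NAMESPACE},
        "spec": {
            "ingressClassName": INGRESS_CLASS,
            # NGINX presents the certificate in TLS_SECRET for HOSTNAME (selected via SNI).
            "tls": [{"hosts": [HOSTNAME], "secretName": TLS_SECRET}],
            "rules": [{"host": HOSTNAME, "http": {"paths": [path]}}],
        },
    }


if __name__ == "__main__":
    print(json.dumps(yelb_ui_https_ingress(), indent=2))
```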

Runtime workflow

- The client application calls the sample web application using its hostname. The DNS zone associated with the custom domain of the Application Gateway Listener uses an A record to resolve the DNS query with the address of the Azure public IP used by the front-end IP configuration of the Application Gateway.

- The request is sent to the Azure public IP used by the front-end IP configuration of the Application Gateway.

- The Application Gateway performs the following actions:

- The Application Gateway handles TLS termination and communicates with the backend application over HTTPS.

- The Application Gateway Listener uses an SSL certificate obtained from Azure Key Vault.

- The Azure WAF Policy associated with the Listener runs OWASP rules and custom rules against the incoming request and blocks malicious attacks.

- The Application Gateway Backend HTTP Settings are configured to invoke the sample web application via HTTPS on port 443.

- The Application Gateway Backend Pool calls the NGINX ingress controller through the AKS internal load balancer using HTTPS.

- The request is sent to one of the agent nodes that hosts a pod of the NGINX ingress controller.

- One of the NGINX ingress controller replicas handles the request and sends it to one of the service endpoints of the yelb-ui service.

- The yelb-ui service calls the yelb-appserver service.

- The yelb-appserver service calls the yelb-db and yelb-cache services.

Deployment

By default, the Bicep templates install the AKS cluster with the Azure CNI Overlay network plugin and the Cilium data plane. You can use an alternative network plugin. Additionally, the project shows how to deploy an AKS cluster with the following extensions and features:

- Microsoft Entra Workload ID

- Istio-based service mesh add-on

- API Server VNet Integration

- Azure NAT Gateway

- Kubernetes Event-driven Autoscaling (KEDA) add-on

- Dapr extension

- Flux V2 extension

- Vertical Pod Autoscaling

- Azure Key Vault Provider for Secrets Store CSI Driver

- Image Cleaner

- Azure Kubernetes Service (AKS) Network Observability

- Managed NGINX ingress with the application routing add-on
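The sample deploys these features with Bicep. As a rough command-line equivalent for a subset of them, the following Python sketch assembles an `az aks create` argument list and prints it for review. The resource group and cluster names are hypothetical, the flag list is intentionally incomplete, and you should confirm each flag against `az aks create --help` for your Azure CLI version.

```python
import shlex
from typing import List

# Hypothetical names; replace with your own resource group and cluster.
RESOURCE_GROUP = "yelb-rg"
CLUSTER_NAME = "yelb-aks"


def aks_create_command() -> List[str]:
    """Build an `az aks create` argument list for a subset of the listed features."""
    return [
        "az", "aks", "create",
        "--resource-group", RESOURCE_GROUP,
        "--name", CLUSTER_NAME,
        # Azure CNI Overlay with the Cilium data plane (the sample's default).
        "--network-plugin", "azure",
        "--network-plugin-mode", "overlay",
        "--network-dataplane", "cilium",
        # OIDC issuer and workload identity for Microsoft Entra Workload ID.
        "--enable-oidc-issuer",
        "--enable-workload-identity",
        # Azure Key Vault provider for Secrets Store CSI Driver.
        "--enable-addons", "azure-keyvault-secrets-provider",
        # Managed NGINX ingress through the application routing add-on.
        "--enable-app-routing",
        # KEDA add-on.
        "--enable-keda",
        "--generate-ssh-keys",
    ]


if __name__ == "__main__":
    # Print a shell-quoted command line to review before running it.
    print(shlex.join(aks_create_command()))
```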

In a production environment, we strongly recommend deploying a private AKS cluster on the Standard pricing tier (formerly Uptime SLA). For more information, see private AKS cluster with a public DNS address.

Alternatively, you can deploy a public AKS cluster and secure access to the API server using authorized IP address ranges. For detailed information and instructions on how to deploy the infrastructure on Azure using Bicep templates, see the companion Azure code sample.
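The two API server access options differ mainly in a few `az aks create` arguments. The following Python sketch returns the extra arguments for each option and validates authorized ranges as CIDR notation before use. It's a hedged illustration, not the sample's Bicep configuration; the example ranges come from documentation-reserved address blocks, and options such as the public DNS address for private clusters are configured separately as described in the linked article.

```python
import ipaddress
from typing import List, Optional


def api_server_access_args(private: bool,
                           authorized_ranges: Optional[List[str]] = None) -> List[str]:
    """Return extra `az aks create` arguments for one of the two access options."""
    if private:
        # Private API server endpoint on the Standard tier (formerly Uptime SLA).
        return ["--tier", "standard", "--enable-private-cluster"]
    if not authorized_ranges:
        raise ValueError("a public API server should be limited to authorized IP ranges")
    # ip_network raises ValueError for malformed CIDRs or host bits set.
    cidrs = [str(ipaddress.ip_network(r, strict=True)) for r in authorized_ranges]
    return ["--api-server-authorized-ip-ranges", ",".join(cidrs)]
```

For example, `api_server_access_args(False, ["203.0.113.0/24", "198.51.100.10/32"])` yields the authorized-ranges flag with both CIDRs joined by a comma, while calling it without ranges raises ValueError instead of silently creating an unrestricted public endpoint.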


Next step

Contributors

Microsoft maintains this article. The following contributors originally wrote it:

Principal author:

- Paolo Salvatori | Principal Customer Engineer

Other contributors:

- Ken Kilty | Principal TPM

- Russell de Pina | Principal TPM

- Erin Schaffer | Content Developer 2
