Quickstart: Get started using chat completions with Azure OpenAI Service

Use this article to get started using Azure OpenAI.

Prerequisites

- An Azure subscription - Create one for free.

- An Azure OpenAI Service resource with either the gpt-4o or the gpt-4o-mini model deployed. We recommend using standard or global standard model deployment types for initial exploration. For more information about model deployment, see the resource deployment guide.

Go to Azure AI Foundry

Navigate to the Azure AI Foundry portal and sign in with credentials that have access to your Azure OpenAI resource. During or after the sign-in workflow, select the appropriate directory, Azure subscription, and Azure OpenAI resource.

From Azure AI Foundry, select Chat playground.

Playground

Start exploring Azure OpenAI Service capabilities with a no-code approach through the Azure AI Foundry Chat playground. From this page, you can quickly iterate and experiment with the capabilities.

Setup

You can use the Prompt samples dropdown to select a few preloaded system message examples to get started.

System messages give the model instructions about how it should behave and any context it should reference when generating a response. You can describe the assistant's personality, tell it what it should and shouldn't answer, and tell it how to format responses.

At any time while using the Chat playground, you can select View code to see Python, curl, and JSON code samples prepopulated based on your current chat session and settings selections. You can then take this code and write an application to complete the same task you're currently performing with the playground.

Chat session

Selecting the Enter button or the right arrow icon sends the entered text to the chat completions API, and the results are returned to the text box.

Select the Clear chat button to delete the current conversation history.

Key settings

| Name | Description |
|---|---|
| Deployments | Your deployment name that is associated with a specific model. |
| Add your data | |
| Parameters | Custom parameters that alter the model responses. When you're starting out, we recommend using the defaults for most parameters. |
| Temperature | Controls randomness. Lowering the temperature means that the model produces more repetitive and deterministic responses. Increasing the temperature results in more unexpected or creative responses. Try adjusting temperature or Top P but not both. |
| Max response (tokens) | Set a limit on the number of tokens per model response. The API on the latest models supports a maximum of 128,000 tokens shared between the prompt (including system message, examples, message history, and user query) and the model's response. One token is roughly four characters for typical English text. |
| Top P | Similar to temperature, this controls randomness but uses a different method. Lowering Top P narrows the model's token selection to likelier tokens. Increasing Top P lets the model choose from tokens with both high and low likelihood. Try adjusting temperature or Top P but not both. |
| Stop sequences | Stop sequences make the model end its response at a desired point. The model response ends before the specified sequence, so it won't contain the stop sequence text. For GPT-35-Turbo, using <|im_end|> ensures that the model response doesn't generate a follow-up user query. You can include as many as four stop sequences. |
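To make two of these settings more concrete, here is a minimal Go sketch. It assumes the rough "four characters per token" rule and the 128,000-token shared limit quoted in the table, and it imitates the effect of stop sequences on a finished string. The helper names (estimateTokens, remainingForResponse, truncateAtStop) are hypothetical and are not part of any Azure SDK; the service's tokenizer gives the real token count.

```go
package main

import (
    "fmt"
    "strings"
    "unicode/utf8"
)

// contextWindow is the shared prompt + response limit quoted in the table.
const contextWindow = 128000

// estimateTokens applies the rough "one token is about four characters" rule.
// It is only an approximation of what the service's tokenizer would report.
func estimateTokens(text string) int {
    chars := utf8.RuneCountInString(text)
    return (chars + 3) / 4 // round up
}

// remainingForResponse returns how many tokens are left for the reply
// after the prompt has been counted against the shared limit.
func remainingForResponse(promptTokens int) int {
    if promptTokens >= contextWindow {
        return 0
    }
    return contextWindow - promptTokens
}

// truncateAtStop imitates stop sequences: the text ends just before the
// earliest matching sequence, and the sequence itself is not included.
func truncateAtStop(text string, stops []string) string {
    cut := len(text)
    for _, s := range stops {
        if s == "" {
            continue // an empty sequence would match everywhere
        }
        if i := strings.Index(text, s); i >= 0 && i < cut {
            cut = i
        }
    }
    return text[:cut]
}

func main() {
    prompt := "You are a helpful assistant. Can I use honey as a substitute for sugar?"
    used := estimateTokens(prompt)
    fmt.Println("estimated prompt tokens:", used)
    fmt.Println("left for the response:", remainingForResponse(used))

    raw := "Yes, you can.<|im_end|>User: next question"
    fmt.Printf("%q\n", truncateAtStop(raw, []string{"<|im_end|>"}))
}
```

In practice the service applies stop sequences while generating, so your application never sees the text after the cut; the last function only illustrates where the response ends.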

View code

Once you have experimented with chatting with the model, select the </> View Code button. This gives you a replay of the code behind your entire conversation so far.

Understanding the prompt structure

If you examine the sample from View code, you'll notice that the conversation is broken into three distinct roles: system, user, and assistant. Each time you message the model, the entire conversation history up to that point is resent. The chat completions API keeps no memory of what you sent in earlier requests, so you provide the conversation history as context to let the model respond properly.
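The resend-everything pattern can be sketched in a few lines of Go. This is an illustration only: the conversation type and its methods are hypothetical, and the JSON shows just the messages portion of a request body (role and content per entry), not a complete API call.

```go
package main

import (
    "encoding/json"
    "fmt"
)

// message mirrors one entry of the "messages" array in a chat request.
type message struct {
    Role    string `json:"role"`
    Content string `json:"content"`
}

// conversation keeps the full history on the client side,
// because the API does not remember earlier requests.
type conversation struct {
    Messages []message `json:"messages"`
}

// newConversation starts the history with the system message.
func newConversation(systemPrompt string) *conversation {
    return &conversation{Messages: []message{{Role: "system", Content: systemPrompt}}}
}

// add appends one turn (user or assistant) to the history.
func (c *conversation) add(role, content string) {
    c.Messages = append(c.Messages, message{Role: role, Content: content})
}

// requestBody serializes the entire history; this is what gets resent each turn.
func (c *conversation) requestBody() ([]byte, error) {
    return json.Marshal(c)
}

func main() {
    c := newConversation("You are a helpful assistant.")
    c.add("user", "Does Azure OpenAI support customer managed keys?")
    // The model's reply is stored so that the next request carries it as context.
    c.add("assistant", "Yes, customer managed keys are supported by Azure OpenAI")
    c.add("user", "Do other Azure AI services support this too?")

    body, err := c.requestBody()
    if err != nil {
        fmt.Println("marshal failed:", err)
        return
    }
    fmt.Println(string(body))
}
```

Because every turn grows the history, every turn also grows the prompt's token count, which is why long conversations eventually need trimming or summarizing.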

The Chat completions how-to guide provides an in-depth introduction into the new prompt structure and how to use chat completions models effectively.

Deploy your model

Once you're satisfied with the experience, you can deploy a web app directly from the portal by selecting the Deploy to button.

This gives you the option to either deploy to a standalone web application, or a copilot in Copilot Studio (preview) if you're using your own data on the model.

As an example, if you choose to deploy a web app:

The first time you deploy a web app, you should select Create a new web app. Choose a name for the app, which will become part of the app URL. For example, https://<appname>.azurewebsites.net.

Select your subscription, resource group, location, and pricing plan for the published app. To update an existing app, select Publish to an existing web app and choose the name of your previous app from the dropdown menu.

If you choose to deploy a web app, see the important considerations for using it.

Clean up resources

Once you're done testing out the Chat playground, if you want to clean up and remove an Azure OpenAI resource, you can delete the resource or resource group. Deleting the resource group also deletes any other resources associated with it.

Next steps

- Learn more about how to work with the new gpt-35-turbo model with the GPT-35-Turbo & GPT-4 how-to guide.

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Package (NuGet) | Samples | Retrieval Augmented Generation (RAG) enterprise chat template

Prerequisites

- An Azure subscription - Create one for free

- The .NET 7 SDK

- An Azure OpenAI Service resource with the gpt-4o model deployed. For more information about model deployment, see the resource deployment guide.

Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI used for keyless authentication with Microsoft Entra ID.

- Assign the Cognitive Services User role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.

Set up

Create a new folder chat-quickstart and go to the quickstart folder with the following command:

mkdir chat-quickstart && cd chat-quickstart

Create a new console application with the following command:

dotnet new console

Install the OpenAI .NET client library with the dotnet add package command:

dotnet add package Azure.AI.OpenAI --prerelease

For the recommended keyless authentication with Microsoft Entra ID, install the Azure.Identity package with:

dotnet add package Azure.Identity

For the recommended keyless authentication with Microsoft Entra ID, sign in to Azure with the following command:

az login

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| AZURE_OPENAI_ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| AZURE_OPENAI_DEPLOYMENT_NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal. |
| OPENAI_API_VERSION | Learn more about API Versions. You can change the version in code or use an environment variable. |

Learn more about keyless authentication and setting environment variables.

Run the quickstart

The sample code in this quickstart uses Microsoft Entra ID for the recommended keyless authentication. If you prefer to use an API key, you can replace the DefaultAzureCredential object with an AzureKeyCredential object.

AzureOpenAIClient openAIClient = new AzureOpenAIClient(new Uri(endpoint), new DefaultAzureCredential());

You can get the chat completion with or without streaming. The following code examples show both methods: the first uses the non-streaming method, and the second uses the streaming method.

Without response streaming

To run the quickstart, follow these steps:

Replace the contents of Program.cs with the following code and update the placeholder values with your own.

using Azure;
using Azure.Identity;
using OpenAI.Assistants;
using Azure.AI.OpenAI;
using OpenAI.Chat;
using static System.Environment;

string endpoint = Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT") ?? "https://<your-resource-name>.openai.azure.com/";
string key = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY") ?? "<your-key>";

// Use the recommended keyless credential instead of the AzureKeyCredential credential.
AzureOpenAIClient openAIClient = new AzureOpenAIClient(new Uri(endpoint), new DefaultAzureCredential());
//AzureOpenAIClient openAIClient = new AzureOpenAIClient(new Uri(endpoint), new AzureKeyCredential(key));

// This must match the custom deployment name you chose for your model
ChatClient chatClient = openAIClient.GetChatClient("gpt-4o");

ChatCompletion completion = chatClient.CompleteChat(
    [
        new SystemChatMessage("You are a helpful assistant that talks like a pirate."),
        new UserChatMessage("Does Azure OpenAI support customer managed keys?"),
        new AssistantChatMessage("Yes, customer managed keys are supported by Azure OpenAI"),
        new UserChatMessage("Do other Azure AI services support this too?")
    ]);

Console.WriteLine($"{completion.Role}: {completion.Content[0].Text}");

Run the application with the following command:

dotnet run

Output

Assistant: Arrr, ye be askin’ a fine question, matey! Aye, several Azure AI services support customer-managed keys (CMK)! This lets ye take the wheel and secure yer data with encryption keys stored in Azure Key Vault. Services such as Azure Machine Learning, Azure Cognitive Search, and others also offer CMK fer data protection. Always check the specific service's documentation fer the latest updates, as features tend to shift swifter than the tides, aye!

This will wait until the model has generated its entire response before printing the results. Alternatively, if you want to asynchronously stream the response and print the results, you can replace the contents of Program.cs with the code in the next example.
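The difference between waiting for the whole reply and consuming it piece by piece can be sketched independently of any SDK. The Go example below imitates a streamed response with a channel; the update type and the fakeStream and collect functions are hypothetical stand-ins, not Azure OpenAI APIs. As in the streaming sample, the role is printed once and each content fragment is printed as soon as it arrives.

```go
package main

import (
    "fmt"
    "strings"
)

// update is a hypothetical stand-in for one streamed chunk of a reply.
type update struct {
    Role    string // set only on the first chunk in this sketch
    Content string
}

// fakeStream emits the given chunks over a channel to imitate streaming.
func fakeStream(chunks []update) <-chan update {
    ch := make(chan update)
    go func() {
        defer close(ch) // closing the channel marks the end of the reply
        for _, u := range chunks {
            ch <- u
        }
    }()
    return ch
}

// collect prints each chunk as it arrives and returns the assembled text.
func collect(stream <-chan update) string {
    var sb strings.Builder
    for u := range stream {
        if u.Role != "" {
            fmt.Printf("%s: ", u.Role)
        }
        fmt.Print(u.Content)
        sb.WriteString(u.Content)
    }
    fmt.Println()
    return sb.String()
}

func main() {
    stream := fakeStream([]update{
        {Role: "assistant", Content: "Arrr, "},
        {Content: "aye, "},
        {Content: "matey!"},
    })
    full := collect(stream)
    fmt.Println("assembled characters:", len(full))
}
```

A non-streaming call corresponds to receiving only the assembled string at the end; streaming lets the application show partial text while the rest is still being generated.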

Async with streaming

To run the quickstart, follow these steps:

Replace the contents of Program.cs with the following code and update the placeholder values with your own.

using Azure;
using Azure.Identity;
using OpenAI.Assistants;
using Azure.AI.OpenAI;
using OpenAI.Chat;
using static System.Environment;

string endpoint = Environment.GetEnvironmentVariable("AZURE_OPENAI_ENDPOINT") ?? "https://<your-resource-name>.openai.azure.com/";
string key = Environment.GetEnvironmentVariable("AZURE_OPENAI_API_KEY") ?? "<your-key>";

// Use the recommended keyless credential instead of the AzureKeyCredential credential.
AzureOpenAIClient openAIClient = new AzureOpenAIClient(new Uri(endpoint), new DefaultAzureCredential());
//AzureOpenAIClient openAIClient = new AzureOpenAIClient(new Uri(endpoint), new AzureKeyCredential(key));

// This must match the custom deployment name you chose for your model
ChatClient chatClient = openAIClient.GetChatClient("gpt-4o");

var chatUpdates = chatClient.CompleteChatStreamingAsync(
    [
        new SystemChatMessage("You are a helpful assistant that talks like a pirate."),
        new UserChatMessage("Does Azure OpenAI support customer managed keys?"),
        new AssistantChatMessage("Yes, customer managed keys are supported by Azure OpenAI"),
        new UserChatMessage("Do other Azure AI services support this too?")
    ]);

await foreach (var chatUpdate in chatUpdates)
{
    if (chatUpdate.Role.HasValue)
    {
        Console.Write($"{chatUpdate.Role} : ");
    }

    foreach (var contentPart in chatUpdate.ContentUpdate)
    {
        Console.Write(contentPart.Text);
    }
}

Run the application with the following command:

dotnet run

Output

Assistant: Arrr, ye be askin’ a fine question, matey! Aye, several Azure AI services support customer-managed keys (CMK)! This lets ye take the wheel and secure yer data with encryption keys stored in Azure Key Vault. Services such as Azure Machine Learning, Azure Cognitive Search, and others also offer CMK fer data protection. Always check the specific service's documentation fer the latest updates, as features tend to shift swifter than the tides, aye!

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Get started with the chat using your own data sample for .NET

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Package (Go) | Samples

Prerequisites

- An Azure subscription - Create one for free

- Go 1.21.0 or higher installed locally.

- An Azure OpenAI Service resource with the gpt-4 model deployed. For more information about model deployment, see the resource deployment guide.

Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI used for keyless authentication with Microsoft Entra ID.

- Assign the Cognitive Services User role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.

Set up

Create a new folder chat-quickstart and go to the quickstart folder with the following command:

mkdir chat-quickstart && cd chat-quickstart

For the recommended keyless authentication with Microsoft Entra ID, sign in to Azure with the following command:

az login

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| AZURE_OPENAI_ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| AZURE_OPENAI_DEPLOYMENT_NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal. |
| OPENAI_API_VERSION | Learn more about API Versions. You can change the version in code or use an environment variable. |

Learn more about keyless authentication and setting environment variables.
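Before running the sample, it can help to confirm that these variables are actually set. The following Go sketch reads the three variables from the table and reports the first problem it finds. The settings type and the loadSettings function are hypothetical helpers; treating the endpoint as a required https URL and the API version as optional are assumptions of this sketch, not rules stated by the service.

```go
package main

import (
    "errors"
    "fmt"
    "net/url"
    "os"
)

// settings holds the values described in the table above.
type settings struct {
    Endpoint   string
    Deployment string
    APIVersion string // treated as optional in this sketch
}

// loadSettings reads the variables through a lookup function, so the check
// can be exercised without touching the real environment.
func loadSettings(getenv func(string) string) (settings, error) {
    s := settings{
        Endpoint:   getenv("AZURE_OPENAI_ENDPOINT"),
        Deployment: getenv("AZURE_OPENAI_DEPLOYMENT_NAME"),
        APIVersion: getenv("OPENAI_API_VERSION"),
    }
    if s.Endpoint == "" {
        return s, errors.New("AZURE_OPENAI_ENDPOINT is not set")
    }
    // Assumption: the endpoint should look like https://<name>.openai.azure.com/.
    u, err := url.Parse(s.Endpoint)
    if err != nil || u.Scheme != "https" || u.Host == "" {
        return s, fmt.Errorf("AZURE_OPENAI_ENDPOINT %q is not an https URL", s.Endpoint)
    }
    if s.Deployment == "" {
        return s, errors.New("AZURE_OPENAI_DEPLOYMENT_NAME is not set")
    }
    return s, nil
}

func main() {
    s, err := loadSettings(os.Getenv)
    if err != nil {
        fmt.Println("configuration problem:", err)
        return
    }
    fmt.Printf("endpoint=%s deployment=%s apiVersion=%q\n", s.Endpoint, s.Deployment, s.APIVersion)
}
```

Checking configuration up front tends to produce clearer messages than letting the first API call fail with an authentication or routing error.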

Run the quickstart

The sample code in this quickstart uses Microsoft Entra ID for the recommended keyless authentication. If you prefer to use an API key, you can replace the NewDefaultAzureCredential implementation with NewKeyCredential.

azureOpenAIEndpoint := os.Getenv("AZURE_OPENAI_ENDPOINT")

credential, err := azidentity.NewDefaultAzureCredential(nil)

client, err := azopenai.NewClient(azureOpenAIEndpoint, credential, nil)

To run the sample:

Create a new file named chat_completions_keyless.go. Copy the following code into the chat_completions_keyless.go file.

package main

import (
    "context"
    "fmt"
    "log"
    "os"

    "github.com/Azure/azure-sdk-for-go/sdk/ai/azopenai"
    "github.com/Azure/azure-sdk-for-go/sdk/azidentity"
)

func main() {
    azureOpenAIEndpoint := os.Getenv("AZURE_OPENAI_ENDPOINT")
    modelDeploymentID := "gpt-4o"
    maxTokens := int32(400)

    credential, err := azidentity.NewDefaultAzureCredential(nil)
    if err != nil {
        log.Printf("ERROR: %s", err)
        return
    }

    client, err := azopenai.NewClient(
        azureOpenAIEndpoint, credential, nil)
    if err != nil {
        log.Printf("ERROR: %s", err)
        return
    }

    // This is a conversation in progress.
    // All messages, regardless of role, count against token usage for this API.
    messages := []azopenai.ChatRequestMessageClassification{
        // System message sets the tone and rules of the conversation.
        &azopenai.ChatRequestSystemMessage{
            Content: azopenai.NewChatRequestSystemMessageContent(
                "You are a helpful assistant."),
        },

        // The user asks a question
        &azopenai.ChatRequestUserMessage{
            Content: azopenai.NewChatRequestUserMessageContent(
                "Can I use honey as a substitute for sugar?"),
        },

        // The reply would come back from the model. You
        // add it to the conversation so we can maintain context.
        &azopenai.ChatRequestAssistantMessage{
            Content: azopenai.NewChatRequestAssistantMessageContent(
                "Yes, you can use honey as a substitute for sugar."),
        },

        // The user answers the question based on the latest reply.
        &azopenai.ChatRequestUserMessage{
            Content: azopenai.NewChatRequestUserMessageContent(
                "What other ingredients can I use as a substitute for sugar?"),
        },

        // From here you can keep iterating, sending responses back from the chat model.
    }

    gotReply := false

    resp, err := client.GetChatCompletions(context.TODO(), azopenai.ChatCompletionsOptions{
        // This is a conversation in progress.
        // All messages count against token usage for this API.
        Messages:       messages,
        DeploymentName: &modelDeploymentID,
        MaxTokens:      &maxTokens,
    }, nil)

    if err != nil {
        // Implement application specific error handling logic.
        log.Printf("ERROR: %s", err)
        return
    }

    for _, choice := range resp.Choices {
        gotReply = true

        if choice.ContentFilterResults != nil {
            fmt.Fprintf(os.Stderr, "Content filter results\n")

            if choice.ContentFilterResults.Error != nil {
                fmt.Fprintf(os.Stderr, " Error:%v\n", choice.ContentFilterResults.Error)
            }

            fmt.Fprintf(os.Stderr, " Hate: sev: %v, filtered: %v\n", *choice.ContentFilterResults.Hate.Severity, *choice.ContentFilterResults.Hate.Filtered)
            fmt.Fprintf(os.Stderr, " SelfHarm: sev: %v, filtered: %v\n", *choice.ContentFilterResults.SelfHarm.Severity, *choice.ContentFilterResults.SelfHarm.Filtered)
            fmt.Fprintf(os.Stderr, " Sexual: sev: %v, filtered: %v\n", *choice.ContentFilterResults.Sexual.Severity, *choice.ContentFilterResults.Sexual.Filtered)
            fmt.Fprintf(os.Stderr, " Violence: sev: %v, filtered: %v\n", *choice.ContentFilterResults.Violence.Severity, *choice.ContentFilterResults.Violence.Filtered)
        }

        if choice.Message != nil && choice.Message.Content != nil {
            fmt.Fprintf(os.Stderr, "Content[%d]: %s\n", *choice.Index, *choice.Message.Content)
        }

        if choice.FinishReason != nil {
            // The conversation for this choice is complete.
            fmt.Fprintf(os.Stderr, "Finish reason[%d]: %s\n", *choice.Index, *choice.FinishReason)
        }
    }

    if gotReply {
        fmt.Fprintf(os.Stderr, "Received chat completions reply\n")
    }
}

Run the following command to create a new Go module:

go mod init chat_completions_keyless.go

Run go mod tidy to install the required dependencies:

go mod tidy

Run the following command to run the sample:

go run chat_completions_keyless.go

Output

The output of the sample code looks similar to the following:

Content filter results

Hate: sev: safe, filtered: false

SelfHarm: sev: safe, filtered: false

Sexual: sev: safe, filtered: false

Violence: sev: safe, filtered: false

Content[0]: There are many alternatives to sugar that you can use, depending on the type of recipe you’re making and your dietary needs or taste preferences. Here are some popular sugar substitutes:

---

### **Natural Sweeteners**

1. **Honey**

- Sweeter than sugar and adds moisture, with a distinct flavor.

- Substitution: Use ¾ cup honey for 1 cup sugar, and reduce the liquid in your recipe by 2 tablespoons. Lower the baking temperature by 25°F to prevent over-browning.

2. **Maple Syrup**

- Adds a rich, earthy sweetness with a hint of maple flavor.

- Substitution: Use ¾ cup syrup for 1 cup sugar. Reduce liquids by 3 tablespoons.

3. **Agave Nectar**

- Sweeter and milder than honey, it dissolves well in cold liquids.

- Substitution: Use ⅔ cup agave for 1 cup sugar. Reduce liquids in the recipe slightly.

4. **Molasses**

- A byproduct of sugar production with a robust, slightly bitter flavor.

- Substitution: Use 1 cup of molasses for 1 cup sugar. Reduce liquid by ¼ cup and consider combining it with other sweeteners due to its strong flavor.

5. **Coconut Sugar**

- Made from the sap of coconut palms, it has a rich, caramel-like flavor.

- Substitution: Use it in a 1:1 ratio for sugar.

6. **Date Sugar** (or Medjool Dates)

- Made from ground, dried dates, or blended into a puree, offering a rich, caramel taste.

- Substitution: Use 1:1 for sugar. Adjust liquid in recipes if needed.

---

### **Calorie-Free or Reduced-Calorie Sweeteners**

1. **Stevia**

- A natural sweetener derived from stevia leaves, hundreds of

Finish reason[0]: length

Received chat completions reply
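The reply above stops mid-sentence and reports the finish reason length, which indicates that it ran into the MaxTokens value of 400 set in the sample. A small helper like the following sketch can make that condition explicit in your own code. The function names are hypothetical; the reason strings stop, length, and content_filter are the values the chat completions API commonly returns, and other values may exist.

```go
package main

import "fmt"

// explainFinishReason turns a finish reason string into a short description.
// Only the commonly seen values are covered; anything else is reported as-is.
func explainFinishReason(reason string) string {
    switch reason {
    case "stop":
        return "the model finished its reply or reached a stop sequence"
    case "length":
        return "the reply was cut off by the max tokens limit"
    case "content_filter":
        return "content was omitted because of a content filter"
    default:
        return "unrecognized finish reason: " + reason
    }
}

// isTruncated reports whether the reply ended because of the token limit,
// in which case a larger limit or a follow-up request may be needed.
func isTruncated(reason string) bool {
    return reason == "length"
}

func main() {
    for _, r := range []string{"stop", "length", "content_filter"} {
        fmt.Printf("%-15s %s (truncated: %v)\n", r, explainFinishReason(r), isTruncated(r))
    }
}
```

With the sample as written, raising maxTokens would be one way to let the model finish the list of sweeteners.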

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Artifact (Maven) | Samples | Retrieval Augmented Generation (RAG) enterprise chat template | IntelliJ IDEA

Prerequisites

- An Azure subscription - Create one for free

- The current version of the Java Development Kit (JDK)

- The Gradle build tool, or another dependency manager.

- An Azure OpenAI Service resource with the gpt-4 model deployed. For more information about model deployment, see the resource deployment guide.

Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI used for keyless authentication with Microsoft Entra ID.

- Assign the Cognitive Services User role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.

Set up

Create a new folder chat-quickstart and go to the quickstart folder with the following command:

mkdir chat-quickstart && cd chat-quickstart

Install Apache Maven. Then run mvn -v to confirm successful installation.

Create a new pom.xml file in the root of your project, and copy the following code into it:

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <groupId>com.azure.samples</groupId>
    <artifactId>quickstart-dall-e</artifactId>
    <version>1.0.0-SNAPSHOT</version>
    <build>
        <sourceDirectory>src</sourceDirectory>
        <plugins>
            <plugin>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.7.0</version>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                </configuration>
            </plugin>
        </plugins>
    </build>
    <dependencies>
        <dependency>
            <groupId>com.azure</groupId>
            <artifactId>azure-ai-openai</artifactId>
            <version>1.0.0-beta.10</version>
        </dependency>
        <dependency>
            <groupId>com.azure</groupId>
            <artifactId>azure-core</artifactId>
            <version>1.53.0</version>
        </dependency>
        <dependency>
            <groupId>com.azure</groupId>
            <artifactId>azure-identity</artifactId>
            <version>1.15.1</version>
        </dependency>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-simple</artifactId>
            <version>1.7.9</version>
        </dependency>
    </dependencies>
</project>

Install the Azure OpenAI SDK and dependencies.

mvn clean dependency:copy-dependencies

For the recommended keyless authentication with Microsoft Entra ID, sign in to Azure with the following command:

az login

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| AZURE_OPENAI_ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| AZURE_OPENAI_DEPLOYMENT_NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal. |
| OPENAI_API_VERSION | Learn more about API Versions. You can change the version in code or use an environment variable. |

Learn more about keyless authentication and setting environment variables.

Run the app

The sample code in this quickstart uses Microsoft Entra ID for the recommended keyless authentication. If you prefer to use an API key, you can replace the DefaultAzureCredential object with an AzureKeyCredential object.

OpenAIClient client = new OpenAIClientBuilder()
    .endpoint(endpoint)
    .credential(new DefaultAzureCredentialBuilder().build())
    .buildClient();

Follow these steps to create a console application for chat completions.

Create a new file named Quickstart.java in the same project root directory.

Copy the following code into Quickstart.java:

import com.azure.ai.openai.OpenAIClient;
import com.azure.ai.openai.OpenAIClientBuilder;
import com.azure.ai.openai.models.ChatChoice;
import com.azure.ai.openai.models.ChatCompletions;
import com.azure.ai.openai.models.ChatCompletionsOptions;
import com.azure.ai.openai.models.ChatRequestAssistantMessage;
import com.azure.ai.openai.models.ChatRequestMessage;
import com.azure.ai.openai.models.ChatRequestSystemMessage;
import com.azure.ai.openai.models.ChatRequestUserMessage;
import com.azure.ai.openai.models.ChatResponseMessage;
import com.azure.ai.openai.models.CompletionsUsage;
import com.azure.identity.DefaultAzureCredentialBuilder;
import com.azure.core.util.Configuration;

import java.util.ArrayList;
import java.util.List;

// The public class name must match the file name, Quickstart.java.
public class Quickstart {

    public static void main(String[] args) {
        String endpoint = Configuration.getGlobalConfiguration().get("AZURE_OPENAI_ENDPOINT");
        String deploymentOrModelId = "gpt-4o";

        // Use the recommended keyless credential instead of the AzureKeyCredential credential.
        OpenAIClient client = new OpenAIClientBuilder()
            .endpoint(endpoint)
            .credential(new DefaultAzureCredentialBuilder().build())
            .buildClient();

        List<ChatRequestMessage> chatMessages = new ArrayList<>();
        chatMessages.add(new ChatRequestSystemMessage("You are a helpful assistant."));
        chatMessages.add(new ChatRequestUserMessage("Can I use honey as a substitute for sugar?"));
        chatMessages.add(new ChatRequestAssistantMessage("Yes, you can use honey as a substitute for sugar."));
        chatMessages.add(new ChatRequestUserMessage("What other ingredients can I use as a substitute for sugar?"));

        ChatCompletions chatCompletions = client.getChatCompletions(deploymentOrModelId, new ChatCompletionsOptions(chatMessages));

        System.out.printf("Model ID=%s is created at %s.%n", chatCompletions.getId(), chatCompletions.getCreatedAt());
        for (ChatChoice choice : chatCompletions.getChoices()) {
            ChatResponseMessage message = choice.getMessage();
            System.out.printf("Index: %d, Chat Role: %s.%n", choice.getIndex(), message.getRole());
            System.out.println("Message:");
            System.out.println(message.getContent());
        }

        System.out.println();
        CompletionsUsage usage = chatCompletions.getUsage();
        System.out.printf("Usage: number of prompt token is %d, "
                + "number of completion token is %d, and number of total tokens in request and response is %d.%n",
            usage.getPromptTokens(), usage.getCompletionTokens(), usage.getTotalTokens());
    }
}

Run your new console application to get a chat completion:

javac Quickstart.java -cp ".;target\dependency\*"

java -cp ".;target\dependency\*" Quickstart

Output

Model ID=chatcmpl-BDgC0Yr8YNhZFhLABQYfx6QfERsVO is created at 2025-03-21T23:35:52Z.

Index: 0, Chat Role: assistant.

Message:

If you're looking to replace sugar in cooking, baking, or beverages, there are several alternatives you can use depending on your tastes, dietary needs, and the recipe. Here's a list of common sugar substitutes:

### **Natural Sweeteners**

1. **Honey**

- Sweeter than sugar, so you may need less.

- Adds moisture to recipes.

- Adjust liquids and cooking temperature when baking to avoid over-browning.

2. **Maple Syrup**

- Provides a rich, complex flavor.

- Can be used in baking, beverages, and sauces.

- Reduce the liquid content slightly in recipes.

3. **Agave Syrup**

- Sweeter than sugar and has a mild flavor.

- Works well in drinks, smoothies, and desserts.

- Contains fructose, so use sparingly.

4. **Date Sugar or Date Paste**

- Made from dates, it's a whole-food sweetener with fiber and nutrients.

- Great for baked goods and smoothies.

- May darken recipes due to its color.

5. **Coconut Sugar**

- Similar in taste and texture to brown sugar.

- Less refined than white sugar.

- Slightly lower glycemic index, but still contains calories.

6. **Molasses**

- Dark, syrupy byproduct of sugar refining.

- Strong flavor; best for specific recipes like gingerbread or BBQ sauce.

### **Artificial Sweeteners**

1. **Stevia**

- Extracted from the leaves of the stevia plant.

- Virtually calorie-free and much sweeter than sugar.

- Available as liquid, powder, or granulated.

2. **Erythritol**

- A sugar alcohol with few calories and a clean, sweet taste.

- Doesn't caramelize like sugar.

- Often blended with other sweeteners.

3. **Xylitol**

- A sugar alcohol similar to erythritol.

- Commonly used in baking and beverages.

- Toxic to pets (especially dogs), so handle carefully.

### **Whole Fruits**

1. **Mashed Bananas**

- Natural sweetness works well in baking.

- Adds moisture to recipes.

- Can replace sugar partially or fully depending on the dish.

2. **Applesauce (Unsweetened)**

- Adds sweetness and moisture to baked goods.

- Reduce other liquids in the recipe accordingly.

3. **Pureed Dates, Figs, or Prunes**

- Dense sweetness with added fiber and nutrients.

- Ideal for energy bars, smoothies, and baking.

### **Other Options**

1. **Brown Rice Syrup**

- Less sweet than sugar, with a mild flavor.

- Good for granola bars and baked goods.

2. **Yacon Syrup**

- Extracted from the root of the yacon plant.

- Sweet and rich in prebiotics.

- Best for raw recipes.

3. **Monk Fruit Sweetener**

- Natural sweetener derived from monk fruit.

- Often mixed with erythritol for easier use.

- Provides sweetness without calories.

### **Tips for Substitution**

- Sweeteners vary in sweetness, texture, and liquid content, so adjust recipes accordingly.

- When baking, reducing liquids or fats slightly may be necessary.

- Taste test when possible to ensure the sweetness level matches your preference.

Whether you're seeking healthier options, low-calorie substitutes, or simply alternatives for flavor, these sugar substitutes can work for a wide range of recipes!

Usage: number of prompt token is 60, number of completion token is 740, and number of total tokens in request and response is 800.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Get started with the chat using your own data sample for Java

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Artifacts (Maven) | Sample

Prerequisites

- An Azure subscription - Create one for free

- The current version of the Java Development Kit (JDK)

- The Spring Boot CLI tool

- An Azure OpenAI Service resource with the gpt-4 model deployed. For more information about model deployment, see the resource deployment guide. This example assumes that your deployment name matches the model name gpt-4.

Set up

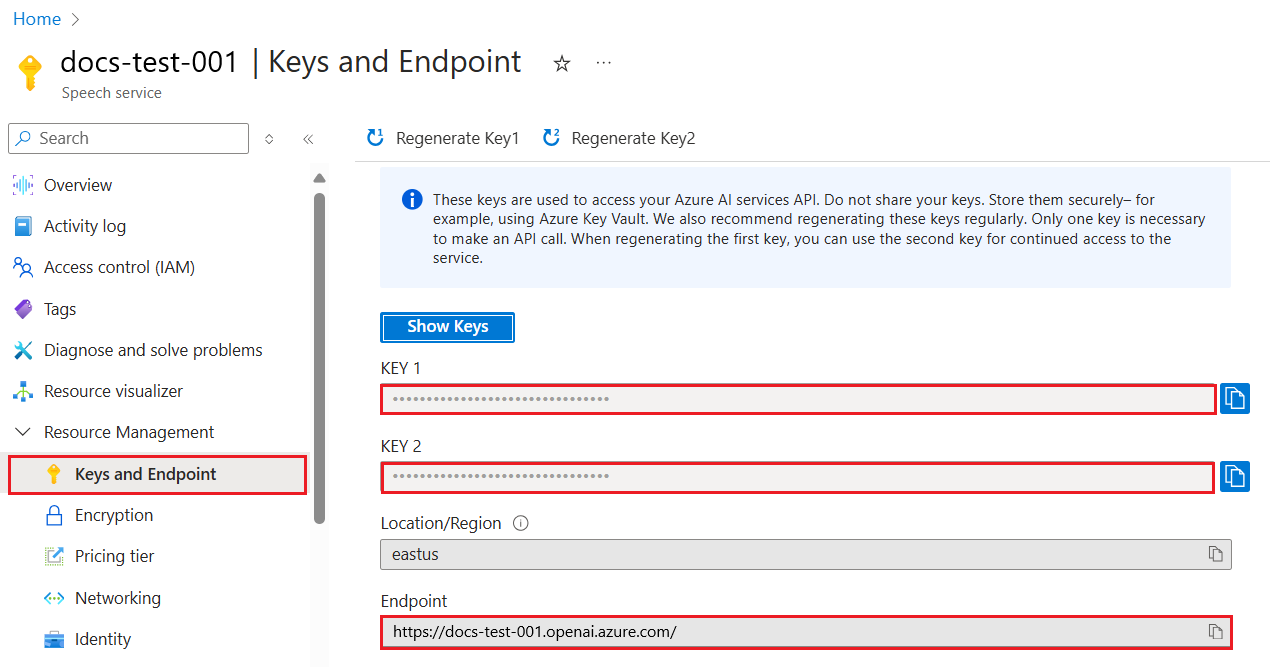

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If you use an API key, store it securely in Azure Key Vault. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

Note

Spring AI defaults the model name to gpt-35-turbo. It's only necessary to provide the SPRING_AI_AZURE_OPENAI_MODEL value if you've deployed a model with a different name.

export SPRING_AI_AZURE_OPENAI_API_KEY="REPLACE_WITH_YOUR_KEY_VALUE_HERE"

export SPRING_AI_AZURE_OPENAI_ENDPOINT="REPLACE_WITH_YOUR_ENDPOINT_HERE"

export SPRING_AI_AZURE_OPENAI_MODEL="REPLACE_WITH_YOUR_MODEL_NAME_HERE"

Create a new Spring application

Create a new Spring project.

In a Bash window, create a new directory for your app, and navigate to it.

mkdir ai-chat-demo && cd ai-chat-demo

Run the spring init command from your working directory. This command creates a standard directory structure for your Spring project including the main Java class source file and the pom.xml file used for managing Maven based projects.

spring init -a ai-chat-demo -n AIChat --force --build maven -x

The generated files and folders resemble the following structure:

ai-chat-demo/
|-- pom.xml
|-- mvnw
|-- mvnw.cmd
|-- HELP.md
|-- src/
    |-- main/
    |   |-- resources/
    |   |   |-- application.properties
    |   |-- java/
    |       |-- com/
    |           |-- example/
    |               |-- aichatdemo/
    |                   |-- AiChatApplication.java
    |-- test/
        |-- java/
            |-- com/
                |-- example/
                    |-- aichatdemo/
                        |-- AiChatApplicationTests.java

Edit Spring application

Edit the pom.xml file.

From the root of the project directory, open the pom.xml file in your preferred editor or IDE and overwrite the file with the following content:

<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.2.0</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.example</groupId>
    <artifactId>ai-chat-demo</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>AIChat</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>17</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter</artifactId>
        </dependency>
        <dependency>
            <groupId>org.springframework.experimental.ai</groupId>
            <artifactId>spring-ai-azure-openai-spring-boot-starter</artifactId>
            <version>0.7.0-SNAPSHOT</version>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
    <repositories>
        <repository>
            <id>spring-snapshots</id>
            <name>Spring Snapshots</name>
            <url>https://repo.spring.io/snapshot</url>
            <releases>
                <enabled>false</enabled>
            </releases>
        </repository>
    </repositories>
</project>

From the src/main/java/com/example/aichatdemo folder, open AiChatApplication.java in your preferred editor or IDE and paste in the following code:

package com.example.aichatdemo;

import java.util.ArrayList;
import java.util.List;

import org.springframework.ai.client.AiClient;
import org.springframework.ai.prompt.Prompt;
import org.springframework.ai.prompt.messages.ChatMessage;
import org.springframework.ai.prompt.messages.Message;
import org.springframework.ai.prompt.messages.MessageType;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.boot.CommandLineRunner;
import org.springframework.boot.SpringApplication;
import org.springframework.boot.autoconfigure.SpringBootApplication;

@SpringBootApplication
public class AiChatApplication implements CommandLineRunner
{
    private static final String ROLE_INFO_KEY = "role";

    @Autowired
    private AiClient aiClient;

    public static void main(String[] args) {
        SpringApplication.run(AiChatApplication.class, args);
    }

    @Override
    public void run(String... args) throws Exception
    {
        System.out.println(String.format("Sending chat prompts to AI service. One moment please...\r\n"));

        // Build the conversation: a system message followed by alternating user and assistant turns.
        final List<Message> msgs = new ArrayList<>();

        msgs.add(new ChatMessage(MessageType.SYSTEM, "You are a helpful assistant"));
        msgs.add(new ChatMessage(MessageType.USER, "Does Azure OpenAI support customer managed keys?"));
        msgs.add(new ChatMessage(MessageType.ASSISTANT, "Yes, customer managed keys are supported by Azure OpenAI."));
        msgs.add(new ChatMessage(MessageType.USER, "Do other Azure AI services support this too?"));

        // Send the whole conversation as a single prompt.
        final var resps = aiClient.generate(new Prompt(msgs));

        System.out.println(String.format("Prompt created %d generated response(s).", resps.getGenerations().size()));

        // Print each generated response along with the role that produced it.
        resps.getGenerations().stream()
          .forEach(gen -> {
              final var role = gen.getInfo().getOrDefault(ROLE_INFO_KEY, MessageType.ASSISTANT.getValue());

              System.out.println(String.format("Generated respose from \"%s\": %s", role, gen.getText()));
          });
    }
}

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Navigate back to the project root folder, and run the app by using the following command:

./mvnw spring-boot:run

Output

  .   ____          _            __ _ _
 /\\ / ___'_ __ _ _(_)_ __  __ _ \ \ \ \
( ( )\___ | '_ | '_| | '_ \/ _` | \ \ \ \
 \\/  ___)| |_)| | | | | || (_| |  ) ) ) )
  '  |____| .__|_| |_|_| |_\__, | / / / /
 =========|_|==============|___/=/_/_/_/
 :: Spring Boot ::                (v3.1.5)

2023-11-07T13:31:10.884-06:00 INFO 6248 --- [ main] c.example.aichatdemo.AiChatApplication : No active profile set, falling back to 1 default profile: "default"

2023-11-07T13:31:11.595-06:00 INFO 6248 --- [ main] c.example.aichatdemo.AiChatApplication : Started AiChatApplication in 0.994 seconds (process running for 1.28)

Sending chat prompts to AI service. One moment please...

Prompt created 1 generated response(s).

Generated respose from "assistant": Yes, other Azure AI services also support customer managed keys. Azure AI Services, Azure Machine Learning, and other AI services in Azure provide options for customers to manage and control their encryption keys. This allows customers to have greater control over their data and security.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Package (npm) | Samples

Note

This guide uses the latest OpenAI npm package which now fully supports Azure OpenAI. If you're looking for code examples for the legacy Azure OpenAI JavaScript SDK, they're currently still available in this repo.

Prerequisites

- An Azure subscription - Create one for free

- LTS versions of Node.js

- Azure CLI, used for passwordless authentication in a local development environment. Create the necessary context by signing in with the Azure CLI.

- An Azure OpenAI Service resource with a gpt-4 series model deployed. For more information about model deployment, see the resource deployment guide.

Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI used for keyless authentication with Microsoft Entra ID.

- Assign the Cognitive Services User role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.

Set up

Create a new folder chat-quickstart and go to the quickstart folder with the following command:

mkdir chat-quickstart && cd chat-quickstart

Create the package.json with the following command:

npm init -y

Install the OpenAI client library for JavaScript with:

npm install openai

For the recommended passwordless authentication:

npm install @azure/identity

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| AZURE_OPENAI_ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| AZURE_OPENAI_DEPLOYMENT_NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal. |
| OPENAI_API_VERSION | Learn more about API Versions. You can change the version in code or use an environment variable. |

Learn more about keyless authentication and setting environment variables.

Caution

To use the recommended keyless authentication with the SDK, make sure that the AZURE_OPENAI_API_KEY environment variable isn't set.

Create a sample application

Create the index.js file with the following code:

const { AzureOpenAI } = require("openai");
const { DefaultAzureCredential, getBearerTokenProvider } = require("@azure/identity");

// You will need to set these environment variables or edit the following values
const endpoint = process.env.AZURE_OPENAI_ENDPOINT || "Your endpoint";
const apiVersion = process.env.OPENAI_API_VERSION || "2024-05-01-preview";
const deployment = process.env.AZURE_OPENAI_DEPLOYMENT_NAME || "gpt-4o"; // This must match your deployment name.

// keyless authentication
const credential = new DefaultAzureCredential();
const scope = "https://cognitiveservices.azure.com/.default";
const azureADTokenProvider = getBearerTokenProvider(credential, scope);

async function main() {
  // Keyless authentication: pass the token provider and API version, not an API key.
  const client = new AzureOpenAI({ endpoint, apiVersion, azureADTokenProvider, deployment });

  const result = await client.chat.completions.create({
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      { role: "user", content: "Does Azure OpenAI support customer managed keys?" },
      { role: "assistant", content: "Yes, customer managed keys are supported by Azure OpenAI." },
      { role: "user", content: "Do other Azure AI services support this too?" },
    ],
    model: "",
  });

  // Print the message from each returned choice.
  for (const choice of result.choices) {
    console.log(choice.message);
  }
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});

module.exports = { main };

Sign in to Azure with the following command:

az login

Run the JavaScript file.

node index.js

Output

== Chat Completions Sample ==

{

content: 'Yes, several other Azure AI services also support customer managed keys for enhanced security and control over encryption keys.',

role: 'assistant'

}

Note

If you receive the error: Error occurred: OpenAIError: The apiKey and azureADTokenProvider arguments are mutually exclusive; only one can be passed at a time. You might need to remove a preexisting environment variable for the API key from your system. Even though the Microsoft Entra ID code sample doesn't explicitly reference the API key environment variable, this error is still generated if one is present on the system executing this sample.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Azure OpenAI Overview

- Get started with the chat using your own data sample for JavaScript

- For more examples, check out the Azure OpenAI Samples GitHub repository

Source code | Package (npm) | Samples

Note

This guide uses the latest OpenAI npm package which now fully supports Azure OpenAI. If you're looking for code examples for the legacy Azure OpenAI JavaScript SDK, they're currently still available in this repo.

Prerequisites

- An Azure subscription - Create one for free

- LTS versions of Node.js

- TypeScript

- Azure CLI, used for passwordless authentication in a local development environment. Create the necessary context by signing in with the Azure CLI.

- An Azure OpenAI Service resource with a gpt-4 series model deployed. For more information about model deployment, see the resource deployment guide.

Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI used for keyless authentication with Microsoft Entra ID.

- Assign the Cognitive Services User role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.

Set up

Create a new folder chat-quickstart and go to the quickstart folder with the following command:

mkdir chat-quickstart && cd chat-quickstart

Create the package.json with the following command:

npm init -y

Update the package.json to ECMAScript with the following command:

npm pkg set type=module

Install the OpenAI client library for JavaScript with:

npm install openai

For the recommended passwordless authentication:

npm install @azure/identity

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| AZURE_OPENAI_ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| AZURE_OPENAI_DEPLOYMENT_NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal. |
| OPENAI_API_VERSION | Learn more about API Versions. You can change the version in code or use an environment variable. |

Learn more about keyless authentication and setting environment variables.

Caution

To use the recommended keyless authentication with the SDK, make sure that the AZURE_OPENAI_API_KEY environment variable isn't set.

Create a sample application

Create the index.ts file with the following code:

import { AzureOpenAI } from "openai";
import { DefaultAzureCredential, getBearerTokenProvider } from "@azure/identity";
import type {
  ChatCompletion,
  ChatCompletionCreateParamsNonStreaming,
} from "openai/resources/index";

// You will need to set these environment variables or edit the following values
const endpoint = process.env.AZURE_OPENAI_ENDPOINT || "Your endpoint";

// Required Azure OpenAI deployment name and API version
const apiVersion = process.env.OPENAI_API_VERSION || "2024-08-01-preview";
const deploymentName = process.env.AZURE_OPENAI_DEPLOYMENT_NAME || "gpt-4o-mini"; // This must match your deployment name.

// keyless authentication
const credential = new DefaultAzureCredential();
const scope = "https://cognitiveservices.azure.com/.default";
const azureADTokenProvider = getBearerTokenProvider(credential, scope);

function getClient(): AzureOpenAI {
  return new AzureOpenAI({
    endpoint,
    azureADTokenProvider,
    apiVersion,
    deployment: deploymentName,
  });
}

function createMessages(): ChatCompletionCreateParamsNonStreaming {
  return {
    messages: [
      { role: "system", content: "You are a helpful assistant." },
      {
        role: "user",
        content: "Does Azure OpenAI support customer managed keys?",
      },
      {
        role: "assistant",
        content: "Yes, customer managed keys are supported by Azure OpenAI.",
      },
      { role: "user", content: "Do other Azure AI services support this too?" },
    ],
    model: "",
  };
}

async function printChoices(completion: ChatCompletion): Promise<void> {
  for (const choice of completion.choices) {
    console.log(choice.message);
  }
}

export async function main() {
  const client = getClient();
  const messages = createMessages();
  const result = await client.chat.completions.create(messages);
  await printChoices(result);
}

main().catch((err) => {
  console.error("The sample encountered an error:", err);
});

Create the tsconfig.json file to transpile the TypeScript code and copy the following code for ECMAScript.

{
  "compilerOptions": {
    "module": "NodeNext",
    "target": "ES2022", // Supports top-level await
    "moduleResolution": "NodeNext",
    "skipLibCheck": true, // Avoid type errors from node_modules
    "strict": true // Enable strict type-checking options
  },
  "include": ["*.ts"]
}

Transpile from TypeScript to JavaScript.

tsc

Run the code with the following command:

node index.js

Output

== Chat Completions Sample ==

{

content: 'Yes, several other Azure AI services also support customer managed keys for enhanced security and control over encryption keys.',

role: 'assistant'

}

Note

If you receive the error: Error occurred: OpenAIError: The apiKey and azureADTokenProvider arguments are mutually exclusive; only one can be passed at a time. You might need to remove a preexisting environment variable for the API key from your system. Even though the Microsoft Entra ID code sample doesn't explicitly reference the API key environment variable, this error is still generated if one is present on the system executing this sample.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Azure OpenAI Overview

- For more examples, check out the Azure OpenAI Samples GitHub repository

Library source code | Package (PyPI) | Retrieval Augmented Generation (RAG) enterprise chat template

Prerequisites

- An Azure subscription - Create one for free

- Python 3.8 or later version.

- The following Python libraries: os.

- An Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 models deployed. For more information about model deployment, see the resource deployment guide.

Set up

Install the OpenAI Python client library with:

pip install openai

Note

This library is maintained by OpenAI. Refer to the release history to track the latest updates to the library.

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"
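Note that setx makes the variables available to newly opened command prompt windows rather than the current one. A script can also fail fast when either variable is missing instead of passing an empty value to the client. The following is a minimal sketch, not part of the official sample: the helper name load_azure_openai_settings is hypothetical, and the only assumption is the two variable names set above.

```python
import os
from typing import Mapping


def load_azure_openai_settings(env: Mapping[str, str] = os.environ) -> dict:
    """Return the endpoint and key, raising a clear error if either is unset."""
    required = ("AZURE_OPENAI_ENDPOINT", "AZURE_OPENAI_API_KEY")
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        # Strip a trailing slash so later URL joins don't produce "//".
        "endpoint": env["AZURE_OPENAI_ENDPOINT"].rstrip("/"),
        "api_key": env["AZURE_OPENAI_API_KEY"],
    }


# Example with a stand-in mapping instead of the real environment.
example_env = {
    "AZURE_OPENAI_ENDPOINT": "https://docs-test-001.openai.azure.com/",
    "AZURE_OPENAI_API_KEY": "REPLACE_WITH_YOUR_KEY_VALUE_HERE",
}
settings = load_azure_openai_settings(example_env)
print(settings["endpoint"])
```

Calling load_azure_openai_settings() with no argument reads the real environment.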

Create a new Python application

Create a new Python file called quickstart.py. Then open it up in your preferred editor or IDE.

Replace the contents of quickstart.py with the following code.

You need to set the model variable to the deployment name you chose when you deployed the GPT-3.5-Turbo or GPT-4 models. Entering the model name will result in an error unless you chose a deployment name that is identical to the underlying model name.

import os
from openai import AzureOpenAI

client = AzureOpenAI(
    azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
    api_key=os.getenv("AZURE_OPENAI_API_KEY"),
    api_version="2024-02-01"
)

response = client.chat.completions.create(
    model="gpt-35-turbo",  # model = "deployment_name".
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Does Azure OpenAI support customer managed keys?"},
        {"role": "assistant", "content": "Yes, customer managed keys are supported by Azure OpenAI."},
        {"role": "user", "content": "Do other Azure AI services support this too?"}
    ]
)

print(response.choices[0].message.content)

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Run the application with the python command on your quickstart file:

python quickstart.py

Output

{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "content": "Yes, most of the Azure AI services support customer managed keys. However, not all services support it. You can check the documentation of each service to confirm if customer managed keys are supported.",
        "role": "assistant"
      }
    }
  ],
  "created": 1679001781,
  "id": "chatcmpl-6upLpNYYOx2AhoOYxl9UgJvF4aPpR",
  "model": "gpt-3.5-turbo-0301",
  "object": "chat.completion",
  "usage": {
    "completion_tokens": 39,
    "prompt_tokens": 58,
    "total_tokens": 97
  }
}

Yes, most of the Azure AI services support customer managed keys. However, not all services support it. You can check the documentation of each service to confirm if customer managed keys are supported.
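The JSON above shows the shape of the full response, while the quickstart script prints only choices[0].message.content, which is the final line of the output. As a rough illustration of how the other fields can be read, the sketch below parses an abbreviated copy of that JSON as a plain dictionary. The stand-in data and variable names are assumptions made for this example; it doesn't call the service.

```python
import json

# Abbreviated copy of the response shown above, used here as stand-in data.
raw = """
{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": {
        "content": "Yes, most of the Azure AI services support customer managed keys.",
        "role": "assistant"
      }
    }
  ],
  "model": "gpt-3.5-turbo-0301",
  "usage": {"completion_tokens": 39, "prompt_tokens": 58, "total_tokens": 97}
}
"""

data = json.loads(raw)
first_choice = data["choices"][0]
reply = first_choice["message"]["content"]
usage = data["usage"]

print(reply)
# "stop" indicates the model finished its answer on its own.
print(first_choice["finish_reason"])
# total_tokens is the sum of prompt and completion tokens (58 + 39 = 97).
print(usage["prompt_tokens"] + usage["completion_tokens"] == usage["total_tokens"])
```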

Understanding the message structure

The GPT-35-Turbo and GPT-4 models are optimized to work with inputs formatted as a conversation. The messages variable passes an array of dictionaries with different roles in the conversation delineated by system, user, and assistant. The system message can be used to prime the model by including context or instructions on how the model should respond.

The GPT-35-Turbo & GPT-4 how-to guide provides an in-depth introduction into the options for communicating with these new models.
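To make the structure concrete, here is a small sketch that builds the same conversation one message at a time and then extends it with a follow-up turn. The make_message helper and the follow-up text are hypothetical, and the role check covers only the three roles described above.

```python
ALLOWED_ROLES = {"system", "user", "assistant"}


def make_message(role: str, content: str) -> dict:
    """Build one chat message, rejecting roles this quickstart doesn't cover."""
    if role not in ALLOWED_ROLES:
        raise ValueError(f"Unsupported role: {role!r}")
    return {"role": role, "content": content}


# The conversation from the quickstart, built one turn at a time.
messages = [
    make_message("system", "You are a helpful assistant."),
    make_message("user", "Does Azure OpenAI support customer managed keys?"),
    make_message("assistant", "Yes, customer managed keys are supported by Azure OpenAI."),
    make_message("user", "Do other Azure AI services support this too?"),
]

# To continue the chat, append the model's reply and the next user turn,
# then pass the whole list in the next request.
messages.append(make_message("assistant", "Yes, most of the Azure AI services support customer managed keys."))
messages.append(make_message("user", "Which documentation should I check?"))

print([m["role"] for m in messages])
```

The resulting list can be passed as the messages argument in quickstart.py.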

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Learn more about how to work with GPT-35-Turbo and the GPT-4 models with our how-to guide.

- Get started with the chat using your own data sample for Python

- For more examples, check out the Azure OpenAI Samples GitHub repository

Prerequisites

- An Azure subscription - Create one for free.

- An Azure OpenAI Service resource with either the gpt-35-turbo or the gpt-4 models deployed. For more information about model deployment, see the resource deployment guide.

Set up

Retrieve key and endpoint

To successfully make a call against Azure OpenAI, you need an endpoint and a key.

| Variable name | Value |
|---|---|
| ENDPOINT | The service endpoint can be found in the Keys & Endpoint section when examining your resource from the Azure portal. Alternatively, you can find the endpoint via the Deployments page in Azure AI Foundry portal. An example endpoint is: https://docs-test-001.openai.azure.com/. |
| API-KEY | This value can be found in the Keys & Endpoint section when examining your resource from the Azure portal. You can use either KEY1 or KEY2. |

Go to your resource in the Azure portal. The Keys & Endpoint section can be found in the Resource Management section. Copy your endpoint and access key as you'll need both for authenticating your API calls. You can use either KEY1 or KEY2. Always having two keys allows you to securely rotate and regenerate keys without causing a service disruption.

Environment variables

Create and assign persistent environment variables for your key and endpoint.

Important

We recommend Microsoft Entra ID authentication with managed identities for Azure resources to avoid storing credentials with your applications that run in the cloud.

Use API keys with caution. Don't include the API key directly in your code, and never post it publicly. If using API keys, store them securely in Azure Key Vault, rotate the keys regularly, and restrict access to Azure Key Vault using role based access control and network access restrictions. For more information about using API keys securely in your apps, see API keys with Azure Key Vault.

For more information about AI services security, see Authenticate requests to Azure AI services.

setx AZURE_OPENAI_API_KEY "REPLACE_WITH_YOUR_KEY_VALUE_HERE"

setx AZURE_OPENAI_ENDPOINT "REPLACE_WITH_YOUR_ENDPOINT_HERE"

REST API

In a bash shell, run the following command. You will need to replace gpt-35-turbo with the deployment name you chose when you deployed the GPT-35-Turbo or GPT-4 models. Entering the model name will result in an error unless you chose a deployment name that is identical to the underlying model name.

curl $AZURE_OPENAI_ENDPOINT/openai/deployments/gpt-35-turbo/chat/completions?api-version=2024-02-01 \

-H "Content-Type: application/json" \

-H "api-key: $AZURE_OPENAI_API_KEY" \

-d '{"messages":[{"role": "system", "content": "You are a helpful assistant."},{"role": "user", "content": "Does Azure OpenAI support customer managed keys?"},{"role": "assistant", "content": "Yes, customer managed keys are supported by Azure OpenAI."},{"role": "user", "content": "Do other Azure AI services support this too?"}]}'

With an example endpoint, the first line of the command would look like this:

curl https://docs-test-001.openai.azure.com/openai/deployments/{YOUR-DEPLOYMENT_NAME_HERE}/chat/completions?api-version=2024-02-01 \

If you encounter an error, double-check that you don't have a doubled / where your endpoint joins /openai/deployments.

If you want to run this command in a normal Windows command prompt you would need to alter the text to remove the \ and line breaks.
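The same request can be assembled programmatically, which sidesteps both the doubled-slash problem and shell line continuations. The sketch below only constructs the request with the Python standard library and doesn't send it. The function names are hypothetical, and the endpoint, key, and deployment name are the placeholder values used in this article.

```python
import json
import urllib.request


def chat_completions_url(endpoint: str, deployment: str, api_version: str) -> str:
    # rstrip avoids the doubled "/" when the endpoint already ends with one.
    return (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )


def build_request(endpoint, api_key, deployment, messages, api_version="2024-02-01"):
    """Build (but don't send) the POST request that the curl command issues."""
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        chat_completions_url(endpoint, deployment, api_version),
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


request = build_request(
    "https://docs-test-001.openai.azure.com/",
    "REPLACE_WITH_YOUR_KEY_VALUE_HERE",
    "gpt-35-turbo",
    [{"role": "user", "content": "Does Azure OpenAI support customer managed keys?"}],
)
print(request.full_url)
```

With a real endpoint and key, urllib.request.urlopen(request) would send the request.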

Important

For production, use a secure way of storing and accessing your credentials like Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Output

{"id":"chatcmpl-6v7mkQj980V1yBec6ETrKPRqFjNw9",

"object":"chat.completion","created":1679072642,

"model":"gpt-35-turbo",

"usage":{"prompt_tokens":58,

"completion_tokens":68,

"total_tokens":126},

"choices":[{"message":{"role":"assistant",

"content":"Yes, other Azure AI services also support customer managed keys. Azure AI services offer multiple options for customers to manage keys, such as using Azure Key Vault, customer-managed keys in Azure Key Vault or customer-managed keys through Azure Storage service. This helps customers ensure that their data is secure and access to their services is controlled."},"finish_reason":"stop","index":0}]}

Output formatting adjusted for ease of reading, actual output is a single block of text without line breaks.
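If the single-line output is hard to read, it can be re-indented locally. The sketch below does so with Python's json module, using a shortened copy of the response above as stand-in data.

```python
import json

# Shortened single-line response used as stand-in data.
compact = (
    '{"id":"chatcmpl-6v7mkQj980V1yBec6ETrKPRqFjNw9","object":"chat.completion",'
    '"usage":{"prompt_tokens":58,"completion_tokens":68,"total_tokens":126}}'
)

parsed = json.loads(compact)
# indent=2 spreads the object across lines without changing its content.
pretty = json.dumps(parsed, indent=2)
print(pretty)
```

From a shell, piping the curl output through python -m json.tool gives a similar result.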

Understanding the message structure

The GPT-35-Turbo and GPT-4 models are optimized to work with inputs formatted as a conversation. The messages variable passes an array of dictionaries with different roles in the conversation delineated by system, user, and assistant. The system message can be used to prime the model by including context or instructions on how the model should respond.

The GPT-35-Turbo & GPT-4 how-to guide provides an in-depth introduction into the options for communicating with these new models.

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.

Next steps

- Learn more about how to work with GPT-35-Turbo and the GPT-4 models with our how-to guide.

- For more examples, check out the Azure OpenAI Samples GitHub repository

Prerequisites

- An Azure subscription - Create one for free

- You can use either the latest version, PowerShell 7, or Windows PowerShell 5.1.

- An Azure OpenAI Service resource with a model deployed. For more information about model deployment, see the resource deployment guide.

- An Azure OpenAI Service resource with the gpt-4o model deployed. For more information about model deployment, see the resource deployment guide.

Microsoft Entra ID prerequisites

For the recommended keyless authentication with Microsoft Entra ID, you need to:

- Install the Azure CLI used for keyless authentication with Microsoft Entra ID.

- Assign the Cognitive Services User role to your user account. You can assign roles in the Azure portal under Access control (IAM) > Add role assignment.

Retrieve resource information

You need to retrieve the following information to authenticate your application with your Azure OpenAI resource:

| Variable name | Value |
|---|---|
| AZURE_OPENAI_ENDPOINT | This value can be found in the Keys and Endpoint section when examining your resource from the Azure portal. |
| AZURE_OPENAI_DEPLOYMENT_NAME | This value will correspond to the custom name you chose for your deployment when you deployed a model. This value can be found under Resource Management > Model Deployments in the Azure portal. |
| OPENAI_API_VERSION | Learn more about API Versions. You can change the version in code or use an environment variable. |

Learn more about keyless authentication and setting environment variables.

Create a new PowerShell script

For the recommended keyless authentication with Microsoft Entra ID, sign in to Azure with the following command:

az login

Create a new PowerShell file called quickstart.ps1. Then open it up in your preferred editor or IDE.

Replace the contents of quickstart.ps1 with the following code. You need to set the name value to the deployment name you chose when you deployed the GPT-4o model. Entering the model name results in an error unless you chose a deployment name that is identical to the underlying model name.

# Azure OpenAI metadata variables
$openai = @{
    api_base    = $Env:AZURE_OPENAI_ENDPOINT
    api_version = '2024-10-21' # This can change in the future.
    name        = 'gpt-4o' # The name you chose for your model deployment.
}

# Use the recommended keyless authentication via bearer token.
$headers = [ordered]@{
    #'api-key' = $Env:AZURE_OPENAI_API_KEY
    'Authorization' = "Bearer $($Env:DEFAULT_AZURE_CREDENTIAL_TOKEN)"
}

# Completion text
$messages = @()
$messages += @{
    role = 'system'
    content = 'You are a helpful assistant.'
}
$messages += @{
    role = 'user'
    content = 'Can I use honey as a substitute for sugar?'
}
$messages += @{
    role = 'assistant'
    content = 'Yes, you can use honey as a substitute for sugar.'
}
$messages += @{
    role = 'user'
    content = 'What other ingredients can I use as a substitute for sugar?'
}

# Adjust these values to fine-tune completions
$body = [ordered]@{
    messages = $messages
} | ConvertTo-Json

# Send a request to generate an answer
$url = "$($openai.api_base)/openai/deployments/$($openai.name)/chat/completions?api-version=$($openai.api_version)"

$response = Invoke-RestMethod -Uri $url -Headers $headers -Body $body -Method Post -ContentType 'application/json'
return $response

Important

For production, use a secure way of storing and accessing your credentials, such as PowerShell Secret Management with Azure Key Vault. For more information about credential security, see the Azure AI services security article.

Run the script using PowerShell. In this example, we're using the -Depth parameter to ensure that the output isn't truncated.

./quickstart.ps1 | ConvertTo-Json -Depth 4
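To see why -Depth matters, the following sketch converts a small nested object with and without the parameter. The $sample object is made up for illustration and only mimics the nesting of a real response. ConvertTo-Json serializes two levels by default, and anything nested more deeply is flattened to a type-name string.

```powershell
# Made-up object that mimics the nesting of a chat completions response.
$sample = [ordered]@{
    choices = @(
        [ordered]@{
            content_filter_results = [ordered]@{
                hate = [ordered]@{
                    filtered = $false
                    severity = 'safe'
                }
            }
        }
    )
}

# Default depth (2): the deeper levels are replaced by a type-name string.
# Newer PowerShell versions also emit a truncation warning, silenced here.
$shallow = $sample | ConvertTo-Json -WarningAction SilentlyContinue

# Depth 4: the full structure, including the severity value, survives.
$deep = $sample | ConvertTo-Json -Depth 4

$shallow
$deep
```

Comparing the two outputs shows the severity value only in the second one, which is why the command above passes -Depth 4.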

Output

The output of the script is a JSON object that contains the response from the Azure OpenAI Service. The output looks similar to the following:

{

"choices": [

{

"content_filter_results": {

"custom_blocklists": {

"filtered": false

},

"hate": {

"filtered": false,

"severity": "safe"

},

"protected_material_code": {

"filtered": false,

"detected": false

},

"protected_material_text": {

"filtered": false,

"detected": false

},

"self_harm": {

"filtered": false,

"severity": "safe"

},

"sexual": {

"filtered": false,

"severity": "safe"

},

"violence": {

"filtered": false,

"severity": "safe"

}

},

"finish_reason": "stop",

"index": 0,

"logprobs": null,

"message": {

"content": "There are many alternatives to sugar that can be used in cooking and baking, depending on your dietary needs, taste preferences, and the type of recipe you're making. Here are some popular sugar substitutes:\n\n---\n\n### 1. **Natural Sweeteners**\n - **Maple Syrup**: A natural sweetener with a rich, distinct flavor. Use about ¾ cup of maple syrup for every cup of sugar, and reduce the liquid in the recipe slightly.\n - **Agave Nectar**: A liquid sweetener that’s sweeter than sugar. Use about ⅔ cup of agave nectar for each cup of sugar, and reduce the liquid in the recipe.\n - **Coconut Sugar**: Made from the sap of the coconut palm, it has a mild caramel flavor. Substitute in a 1:1 ratio for sugar.\n - **Molasses**: A by-product of sugar production, molasses is rich in flavor and best for recipes like gingerbread or barbecue sauce. Adjust quantities based on the recipe.\n - **Stevia (Natural)**: Derived from the stevia plant, it's intensely sweet and available in liquid or powder form. Use sparingly, as a little goes a long way.\n\n---\n\n### 2. **Fruit-Based Sweeteners**\n - **Ripe Bananas**: Mashed bananas work well for baking recipes like muffins or pancakes. Use about ½ cup of mashed banana for every cup of sugar and reduce the liquid slightly.\n - **Applesauce**: Unsweetened applesauce adds sweetness and moisture to baked goods. Replace sugar in a 1:1 ratio, but reduce the liquid by ¼ cup.\n - **Dates/Date Paste**: Blend dates with water to make a paste, which works well in recipes like energy bars, cakes, or smoothies. Use in a 1:1 ratio for sugar.\n - **Fruit Juices (e.g., orange juice)**: Can be used to impart natural sweetness but is best suited for specific recipes like marinades or desserts.\n\n---\n\n### 3. **Artificial and Low-Calorie Sweeteners**\n - **Erythritol**: A sugar alcohol with no calories. 
Substitute in equal amounts, but be careful as it may cause a cooling sensation in some recipes.\n - **Xylitol**: Another sugar alcohol, often used in gum and candies. It’s a 1:1 sugar substitute but may affect digestion if consumed in large quantities.\n - **Monk Fruit Sweetener**: A natural, calorie-free sweetener that’s significantly sweeter than sugar. Follow the product packaging for exact substitution measurements.\n - **Aspartame, Sucralose, or Saccharin** (Artificial Sweeteners): Often used for calorie reduction in beverages or desserts. Follow package instructions for substitution.\n\n---\n\n### 4. **Other Natural Alternatives**\n - **Brown Rice Syrup**: A sticky, malt-flavored syrup used in granolas or desserts. Substitute 1 ¼ cups of brown rice syrup for every cup of sugar.\n - **Barley Malt Syrup**: A thick, dark syrup with a distinct flavor. It can replace sugar but might require recipe adjustments due to its strong taste.\n - **Yacon Syrup**: Made from the root of the yacon plant, it’s similar in texture to molasses and has a mild sweetness.\n\n---\n\n### General Tips for Substituting Sugar:\n- **Adjust Liquids:** Many liquid sweeteners (like honey or maple syrup) require reducing the liquid in the recipe to maintain texture.\n- **Baking Powder Adjustment:** If replacing sugar with an acidic sweetener (e.g., honey or molasses), you might need to add a little baking soda to neutralize acidity.\n- **Flavor Changes:** Some substitutes, like molasses or coconut sugar, have distinct flavors that can influence the taste of your recipe.\n- **Browning:** Sugar contributes to caramelization and browning in baked goods. Some alternatives may yield lighter-colored results.\n\nBy trying out different substitutes, you can find what works best for your recipes!",

"refusal": null,

"role": "assistant"

}

}

],

"created": 1742602230,

"id": "chatcmpl-BDgjWjEboQ0z6r58pvSBgH842JbB2",

"model": "gpt-4o-2024-11-20",

"object": "chat.completion",

"prompt_filter_results": [

{

"prompt_index": 0,

"content_filter_results": {

"custom_blocklists": {

"filtered": false

},

"hate": {

"filtered": false,

"severity": "safe"

},

"jailbreak": {

"filtered": false,

"detected": false

},

"self_harm": {

"filtered": false,

"severity": "safe"

},

"sexual": {

"filtered": false,

"severity": "safe"

},

"violence": {

"filtered": false,

"severity": "safe"

}

}

}

],

"system_fingerprint": "fp_a42ed5ff0c",

"usage": {

"completion_tokens": 836,

"completion_tokens_details": {

"accepted_prediction_tokens": 0,

"audio_tokens": 0,

"reasoning_tokens": 0,

"rejected_prediction_tokens": 0

},

"prompt_tokens": 60,

"prompt_tokens_details": {

"audio_tokens": 0,

"cached_tokens": 0

},

"total_tokens": 896

}

}

Remarks

You can skip the ConvertTo-Json step if you want to see the raw output.

./quickstart.ps1

The output looks like this:

choices : {@{content_filter_results=; finish_reason=stop; index=0; logprobs=; message=}}

created : 1742602727

id : chatcmpl-BDgrX0BF38mZuszFeyU1NKZSiRpSX

model : gpt-4o-2024-11-20

object : chat.completion

prompt_filter_results : {@{prompt_index=0; content_filter_results=}}

system_fingerprint : fp_b705f0c291

usage : @{completion_tokens=944; completion_tokens_details=; prompt_tokens=60; prompt_tokens_details=; total_tokens=1004}

You can edit the contents of the quickstart.ps1 script to return the entire object or a specific property. For example, to return only the generated text, replace the last line of the script (return $response) with the following:

return $response.choices.message.content

Then run the script again.

./quickstart.ps1

The output looks like this:

There are several ingredients that can be used as substitutes for sugar, depending on the recipe and your dietary preferences. Here are some popular options:

---

### **Natural Sweeteners**

1. **Maple Syrup**

- Flavor: Rich and slightly caramel-like.

- Use: Works well in baking, sauces, oatmeal, and beverages.

- Substitution: Replace sugar in a 1:1 ratio but reduce the liquid in your recipe by about 3 tablespoons per cup of maple syrup.

2. **Agave Nectar**

- Flavor: Mildly sweet, less pronounced than honey.

- Use: Good for beverages, desserts, and dressings.

- Substitution: Use about 2/3 cup of agave nectar for every 1 cup of sugar, and reduce other liquids slightly.

3. **Molasses**

- Flavor: Strong, earthy, and slightly bitter.

- Use: Perfect for gingerbread, cookies, and marinades.

- Substitution: Replace sugar in equal amounts, but adjust for the strong flavor.

4. **Date Paste**

- Flavor: Naturally sweet with hints of caramel.

- Use: Works well in energy bars, smoothies, or baking recipes.

- Substitution: Blend pitted dates with water to create paste (about 1:1 ratio). Use equal amounts in recipes.

5. **Coconut Sugar**

- Flavor: Similar to brown sugar, mildly caramel-like.

- Use: Excellent for baking.

- Substitution: Replace sugar in a 1:1 ratio.

---

### **Low-Calorie Sweeteners**

1. **Stevia**

- Flavor: Very sweet but can have a slightly bitter aftertaste.

- Use: Works in beverages, desserts, and some baked goods.

- Substitution: Use less—around 1 teaspoon of liquid stevia or 1/2 teaspoon stevia powder for 1 cup of sugar. Check the package for exact conversion.

2. **Erythritol**

- Flavor: Similar to sugar but less sweet.

- Use: Perfect for baked goods and beverages.

- Substitution: Replace sugar using a 1:1 ratio, though you may need to adjust for less sweetness.

3. **Xylitol**

- Flavor: Similar to sugar.

- Use: Great for baking or cooking but avoid using it for recipes requiring caramelization.

- Substitution: Use a 1:1 ratio.

---

### **Fruit-Based Sweeteners**

1. **Mashed Bananas**

- Flavor: Sweet with a fruity note.

- Use: Great for muffins, cakes, and pancakes.

- Substitution: Use 1 cup mashed banana for 1 cup sugar, but reduce liquid slightly in the recipe.

2. **Applesauce**

- Flavor: Mildly sweet.

- Use: Excellent for baked goods like muffins or cookies.

- Substitution: Replace sugar 1:1, but reduce other liquids slightly.

3. **Fruit Juice Concentrates**

- Flavor: Sweet with fruity undertones.

- Use: Works well in marinades, sauces, and desserts.

- Substitution: Use equal amounts, but adjust liquid content.

---

### **Minimal-Processing Sugars**

1. **Raw Honey**

- Flavor: Sweet with floral undertones.

- Use: Good for baked goods and beverages.

- Substitution: Replace sugar in a 1:1 ratio, but reduce other liquids slightly.

2. **Brown Rice Syrup**

- Flavor: Mildly sweet with a hint of nuttiness.

- Use: Suitable for baked goods and granola bars.

- Substitution: Use 1-1/4 cups of syrup for 1 cup of sugar, and decrease liquid in the recipe.

---

### Tips for Substitution:

- Adjust for sweetness: Some substitutes are sweeter or less sweet than sugar, so amounts may need tweaking.

- Baking considerations: Sugar affects texture, browning, and moisture. If you replace it, you may need to experiment to get the desired result.

- Liquid adjustments: Many natural sweeteners are liquid, so you’ll often need to reduce the amount of liquid in your recipe.

Would you like help deciding the best substitute for a specific recipe?
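The same dot notation reaches other parts of the response object. The sketch below parses a trimmed-down copy of the JSON output shown earlier, so that it runs without calling the service. The values are taken from that sample, and the content string is shortened. In the script, the $response returned by Invoke-RestMethod would take the place of the parsed object.

```powershell
# Trimmed copy of the sample output, parsed into an object shaped like the one
# Invoke-RestMethod returns. The content string is shortened for illustration.
$response = @'
{
  "choices": [
    {
      "finish_reason": "stop",
      "index": 0,
      "message": { "content": "There are many alternatives to sugar...", "role": "assistant" }
    }
  ],
  "model": "gpt-4o-2024-11-20",
  "usage": { "completion_tokens": 836, "prompt_tokens": 60, "total_tokens": 896 }
}
'@ | ConvertFrom-Json

# Text of the first (here, the only) choice.
$answer = $response.choices[0].message.content

# "stop" indicates the model reached a natural end or a stop sequence.
$finishReason = $response.choices[0].finish_reason

# Token counts, useful for tracking consumption.
$totalTokens = $response.usage.total_tokens

"Finish reason: $finishReason"
"Total tokens:  $totalTokens"
$answer
```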

Understanding the message structure

The GPT-4 models are optimized to work with inputs formatted as a conversation. In the script, the $messages variable holds an array of hashtables, each with a role (system, user, or assistant) and the content for that turn. The system message can be used to prime the model by including context or instructions on how the model should respond.

The GPT-4 how-to guide provides an in-depth introduction into the options for communicating with these models.
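As a sketch of how a conversation grows, the following example appends an assistant reply and a follow-up user question to the messages array before building the next request body. Each request has to carry the conversation history you want the model to consider. The New-ChatMessage helper and the hard-coded reply are hypothetical and used only for illustration. In a real script, the reply would come from $response.choices[0].message.content.

```powershell
# Hypothetical helper: returns a message hashtable with a role and content.
function New-ChatMessage {
    param(
        [Parameter(Mandatory)]
        [ValidateSet('system', 'user', 'assistant')]
        [string] $Role,

        [Parameter(Mandatory)]
        [string] $Content
    )
    return @{ role = $Role; content = $Content }
}

# Start with the system instruction and the first user question.
$messages = @()
$messages += New-ChatMessage -Role system -Content 'You are a helpful assistant.'
$messages += New-ChatMessage -Role user -Content 'Can I use honey as a substitute for sugar?'

# Placeholder for the model's reply to the first question.
$reply = 'Yes, you can use honey as a substitute for sugar.'

# Append the reply and the follow-up so the next request carries the history.
$messages += New-ChatMessage -Role assistant -Content $reply
$messages += New-ChatMessage -Role user -Content 'What other ingredients can I use as a substitute for sugar?'

# Same request body shape as in quickstart.ps1.
$body = [ordered]@{ messages = $messages } | ConvertTo-Json
$body
```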

Clean up resources

If you want to clean up and remove an Azure OpenAI resource, you can delete the resource. Before deleting the resource, you must first delete any deployed models.
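If you prefer the command line, the order described above (deployed models first, then the resource) might look like the following sketch with the Azure CLI. The function name is hypothetical and every parameter value is a placeholder for your own names. The sketch assumes that you're signed in with az login.

```powershell
# Hypothetical helper: deletes one model deployment, then the Azure OpenAI resource.
function Remove-AzureOpenAIQuickstart {
    param(
        [Parameter(Mandatory)] [string] $ResourceGroup,
        [Parameter(Mandatory)] [string] $ResourceName,
        [Parameter(Mandatory)] [string] $DeploymentName
    )

    # 1. Delete the model deployment.
    az cognitiveservices account deployment delete `
        --resource-group $ResourceGroup `
        --name $ResourceName `
        --deployment-name $DeploymentName
    if ($LASTEXITCODE -ne 0) { throw "Deleting deployment '$DeploymentName' failed." }

    # 2. Delete the resource itself.
    az cognitiveservices account delete `
        --resource-group $ResourceGroup `
        --name $ResourceName
    if ($LASTEXITCODE -ne 0) { throw "Deleting resource '$ResourceName' failed." }
}
```

For example, Remove-AzureOpenAIQuickstart -ResourceGroup 'my-resource-group' -ResourceName 'my-openai-resource' -DeploymentName 'gpt-4o' removes a single deployment and then the resource. If the resource has more than one deployment, delete the others first.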

Next steps

- Learn more about how to work with the GPT-4 models with our how-to guide.

- For more examples, check out the Azure OpenAI Samples GitHub repository.